Introduction

With a single click or tap, users can access an almost limitless amount of information on the Internet. Its growth, however, has far outpaced society’s ability to adapt to it. Beyond being a space for communication, it is also a venue for business and entertainment.

When people use the Internet, they also enjoy the services and conveniences it brings. Artificial intelligence (AI) is a major topic today: it has turned science fiction into reality and is increasingly entering public view, influencing and changing people’s lives and ways of thinking. In 2016, AlphaGo, an AI program developed by a DeepMind team led by Demis Hassabis, came to prominence, and in May 2017 it beat Ke Jie, then the world’s top-ranked Go player. Everyday examples of AI include the translation software DeepL and the question-answering AI program ChatGPT.

What is AI? According to Crawford, it is “neither artificial nor intelligent,” even though one of the most popular textbooks on the subject claims that with AI a “reliability and competence of codification can be produced which far surpasses the highest level that the unaided human expert has ever, perhaps even could ever attain” (Crawford, 2021).

In fact, AI is already more widespread in daily life than we think. For instance, face recognition technology is most widely used in attendance tracking, access control, security, and finance, while fields such as logistics, retail, smartphones, transportation, education, real estate, government administration, entertainment, advertising, and network information security are starting to adopt it (Li et al., 2020).

Face recognition represents major progress in AI technology: with the help of deep learning, it has advanced significantly and can surpass human performance. Deep learning has also shown its versatility in related fields, such as aerial activity detection and emotion-driven facial expression recognition (Huang et al., 2025).

In this article, I start from the 2025 news surrounding AI face-swapping technology to make people aware of how severely this technology can affect society.

Face-Swapping technology

The modern world is populated by fake faces. No longer confined to portraiture and sculpture, human simulacra are increasingly life-like, including android robots built to mimic human gaze, speech, and emotion (MacDorman, 2016).

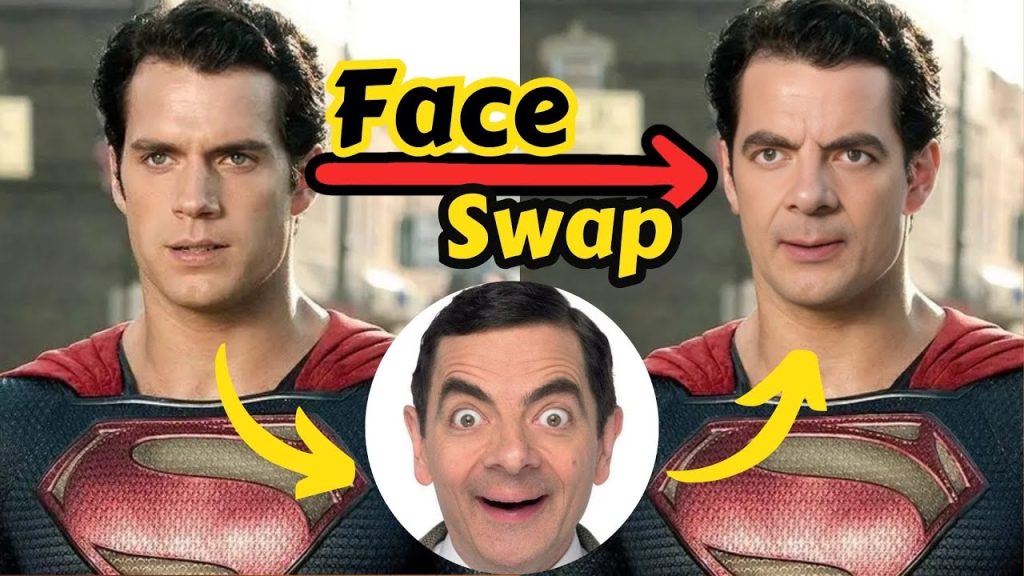

AI replaced the face of Henry Cavill with that of Mr Bean.

Face swapping aims to replace a source face with a target face, generating a fake face that is indistinguishable from a real one to the human eye. When the technology first appeared, people used it mainly for entertainment; it is now increasingly embraced by the film and art industries, where it has high commercial value. Deepfake technology has made it possible to replace the identity of the original face with that of the target face, so altering the appearance of a given face image at will has become easy.

As a result, the inputs to face recognition models may not always be genuine, which opens the door to deception. Criminals increasingly exploit these techniques to create fake videos of political figures or celebrities for illicit political or commercial purposes, raising concerns about online privacy and security.

AI Nudify Service

“The criminal is the creative artist; the detective only the critic.” – G. K. Chesterton

If even celebrities can be targeted in this way, the privacy and security of ordinary users are genuinely at risk. Jeremiah Fowler, described as an “Indiana Jones of insecure systems,” discovered a publicly accessible storage bucket containing 93,485 images and JSON files, including sexually explicit AI-generated images; the JSON files recorded user prompts and linked to the images generated from them. One image accompanying the report is a collage of screenshots of fully clothed AI-generated images of what appear to be very young girls. It is not known whether these images depict the faces of real children or are entirely AI-generated.

According to Fowler, the system he discovered and the files it held belonged to the AI company AI-NOMIS and its web application GenNomis. Fowler describes GenNomis as a “nude service” that uses AI to swap faces in photos or digitally undress subjects, frequently without their consent, to make them appear nude or in a pornographic setting. Because today’s AI systems process photos with highly advanced techniques, the resulting images are often realistic, which makes the unwitting victim’s humiliation and pain even worse (Lyons, 2025).

Numerous AI image generators offer to create pornographic images.

The GenNomis and AI-NOMIS websites went offline after Fowler’s efforts, but his research indicates that “users and tech companies are already abusing artificial intelligence, and it’s so bad that AI can be used to put people in the realm of pornography.” If such images are leaked, the consequences for the people depicted can be severe.

Means of dealing with AI-enabled crime

The good news is that law enforcement agencies around the world are waking up to the threat that AI-generated content poses in terms of child abuse material and criminal activity. In late February 2025, the Australian Federal Police arrested two men as part of an international law enforcement operation led by Danish authorities. The operation, dubbed ‘Operation Cumberland’ and involving Europol and law enforcement agencies from 18 other countries, resulted in the arrest of 23 other suspects. All individuals face charges related to the alleged creation and distribution of AI-generated child sexual abuse material (AFP, 2025).

“In Australia, it is a criminal offence to create, possess or share content that depicts the abuse of someone aged under 18; it is child abuse material irrespective of whether it is ‘real’ or not.”

The Cairns man was charged with:

Four counts of possessing child abuse material accessed or obtained using a carriage service, contrary to section 474.22A of the Criminal Code (Cth).

The Toukley man was charged with:

One count of possessing child abuse material accessed or obtained using a carriage service, contrary to section 474.22A of the Criminal Code (Cth);

One count of using a carriage service to access child abuse material, contrary to section 474.22 of the Criminal Code (Cth).

The maximum penalty for each of these offences is 15 years’ imprisonment.

The Other Side of the Concern

Even when governments act swiftly against those who use AI to spread fake content for criminal ends, the harm done to victims can be irreparable. Sadly, there have been numerous cases in which people, many of them young, have taken their own lives after being targeted by online sextortion.

Ryan Last, a 17-year-old straight-A student and Scout, died by suicide.

‘Someone pretending to be a girl contacted him, they started talking, the online conversation quickly became intimate, and then it turned into a crime,’ said his mother, Pauline Stuart. The scammer, posing as a young girl, sent Ryan a nude photo and then demanded that Ryan share a sexually explicit photo of himself in return. After Ryan shared his intimate photo, the cybercriminal immediately demanded $5,000, threatening to publish the photo and send it to Ryan’s family and friends (CNN, 2022).

Ryan Last and his mother, Pauline Stuart.

Young people who had experienced child sexual abuse had a suicide rate 10.7 to 13.0 times the national Australian rate. Of the abused children, 32% had attempted suicide and 43% had thought about suicide since the abuse (Plunkett, 2001).

Young people who have attempted suicide deserve attention too. They often suffer serious harm from their family environment and from their parents’ reactions, which can include anger at the victim after the abuse, a lack of anger at the perpetrator, and denial that the abuse occurred. This suggests that if parents are absent or respond in an unsupportive way, a child or young person may have only limited chances of coming to terms with such experiences of cyberbullying.

‘Teenagers’ brains are still developing,’ says Dr Scott Hadland, director of adolescent medicine at Massachusetts General Hospital (Mass General) in Boston. ‘So when something catastrophic happens, such as personal photos being posted online for people to see, it’s hard for them to get past that moment.’

AI as a digital guardian

Although the rapid expansion of the Internet has left children more exposed to online predators who use chat and other channels, AI technology can also help safeguard children’s physical and emotional well-being.

In a world where digital devices are as common as toys for children, parents face unprecedented difficulties navigating the complex realm of online safety. At the Qatar Cyber Summit, technology pioneer Yuri Divonos shared insights into innovative ways to help families address these issues (Antoniewicz, 2025).

Most parental control apps simply restrict access or limit screen time without distinguishing between beneficial and harmful online activities. While many countries are implementing regulations regarding children’s screen time, a more nuanced approach is necessary.

Controlling the amount of time spent online certainly matters, but the quality of that screen time is much more important. Not all screen time is equal: some children use their devices for creative purposes and learning, while others may just ‘play games or mindlessly scroll all day.’

Currently, however, ‘social media algorithms work in such a way that if you show an interest in certain content, they serve you more of it. Suddenly, you are bombarded with potentially harmful content that can seriously affect your well-being.’ Aura, the product Divonos presented, takes a fundamentally different approach. The technology essentially works like an AI agent that constantly monitors a child’s digital behaviour patterns and builds a personalized baseline for each user; when anomalies appear, the system can alert parents.
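To make the “personalized baseline” idea concrete, here is a minimal sketch in Python. It is not Aura’s method, which the source does not describe. It assumes a single hypothetical daily metric per child (for example, a count of messages that some upstream classifier has tagged as distressed) and flags any day whose z-score against that child’s own history exceeds a threshold. The metric, the function names, and the threshold of 3 are illustrative assumptions.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class Baseline:
    """Personal baseline for one hypothetical behavioural metric."""
    mu: float     # the child's usual daily value
    sigma: float  # how much that value normally varies


def fit_baseline(history: list[float]) -> Baseline:
    """Learn a baseline from one child's own past daily values."""
    if len(history) < 2:
        raise ValueError("need at least two observations to estimate spread")
    return Baseline(mu=mean(history), sigma=stdev(history))


def is_anomalous(value: float, baseline: Baseline, threshold: float = 3.0) -> bool:
    """Flag a value whose z-score against the personal baseline exceeds threshold."""
    if baseline.sigma == 0:
        # A perfectly flat history: any change at all counts as unusual.
        return value != baseline.mu
    z = (value - baseline.mu) / baseline.sigma
    # Use the absolute value: a sudden drop (e.g. withdrawal) can matter too.
    return abs(z) > threshold


def daily_alerts(history: list[float], new_days: dict[str, float],
                 threshold: float = 3.0) -> list[str]:
    """Return the days that would trigger a (hypothetical) parent alert."""
    baseline = fit_baseline(history)
    return [day for day, value in new_days.items()
            if is_anomalous(value, baseline, threshold)]


if __name__ == "__main__":
    past_week = [2, 3, 2, 4, 3, 2, 3]  # hypothetical daily counts
    print(daily_alerts(past_week, {"Mon": 3, "Tue": 12}))  # → ['Tue']
```

Comparing each child with their own history rather than with a population average is what makes the baseline personal: a busy messaging day may be normal for one child but stand out for a quieter one. A real system would track many signals and update the baseline over time.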

Divonos shared insights from test families already using the product: “We analyze data from the child’s device and provide parents with meaningful observations like, ‘Your child shows a strong interest in journaling and might be interested in pursuing journalism as a career. They’ve been expressing confidence in their messages lately, but we noticed they experienced significant stress on this particular day.’”

Who is responsible?

It is encouraging to see cutting-edge technology companies designing user-friendly AI systems, but when it comes to rule-making, I think AI providers must have safeguards in place to prevent abuse. Developers should implement a range of detection systems to flag and block attempts to generate explicit deepfake content, especially when it involves images of minors or of individuals who have not consented.

AI providers that allow users to anonymously upload and generate images without any type of authentication or watermarking technology by default are providing an open invitation for abuse.

Regulation of AI-generated images and content still feels like the Wild West, and stronger detection mechanisms and strict verification requirements are essential. It should become easier to identify perpetrators and hold them accountable for the content they create, and service providers should also be able to remove harmful content quickly. My advice to any AI service provider is to first understand what users are doing and then restrict what they can do when it comes to illegal or questionable content. I also recommend that providers establish a system for removing potentially infringing content from their servers or storage networks.
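One way to read the call for authentication by default and for easier identification of perpetrators is to have a service sign a provenance record for every image it generates. The sketch below is an illustrative assumption, not a mechanism described in the sources: it uses Python’s standard `hmac` and `hashlib` modules to bind the SHA-256 hash of each generated image to the authenticated account that requested it, so the provider could later check whether a circulating file came from its system and which account produced it. `SECRET_KEY`, the record fields, and the function names are hypothetical.

```python
import hashlib
import hmac
import json
import time

# Hypothetical server-side secret. A real service would load this from a
# key-management system rather than hard-coding it.
SECRET_KEY = b"replace-with-a-real-secret"


def _sign(fields: dict, key: bytes) -> str:
    """HMAC-SHA256 over a canonical (key-sorted) JSON encoding of the fields."""
    payload = json.dumps(fields, sort_keys=True).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def make_provenance_record(image_bytes: bytes, account_id: str,
                           key: bytes = SECRET_KEY) -> dict:
    """Bind a generated image's hash to the authenticated account that requested it."""
    fields = {
        "image_sha256": hashlib.sha256(image_bytes).hexdigest(),
        "account_id": account_id,
        "created_at": int(time.time()),
    }
    return {**fields, "signature": _sign(fields, key)}


def verify_provenance(image_bytes: bytes, record: dict,
                      key: bytes = SECRET_KEY) -> bool:
    """True only if the record is untampered and matches these exact image bytes."""
    fields = {k: v for k, v in record.items() if k != "signature"}
    # Constant-time comparison avoids leaking signature bytes through timing.
    signature_ok = hmac.compare_digest(_sign(fields, key), record.get("signature", ""))
    hash_ok = fields.get("image_sha256") == hashlib.sha256(image_bytes).hexdigest()
    return signature_ok and hash_ok


if __name__ == "__main__":
    image = b"...generated image bytes..."
    record = make_provenance_record(image, account_id="user-42")
    print(verify_provenance(image, record))                         # True
    print(verify_provenance(b"re-encoded or edited bytes", record))  # False
```

Because the record covers the exact image bytes, any re-encoding, cropping, or screenshot breaks the match. That is one reason watermarking, which aims to survive such edits, is still needed: a provenance record of this kind complements a watermark rather than replacing it.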

Conclusion

“Every age has its own challenges, and these are the challenges that children will face all over the world.”

Earlier this year, the UK government promised to make it a criminal offence to produce and share sexually explicit deepfake images.

In the US, the bipartisan Take It Down Act aims to make it a criminal offence to post non-consensual sexually exploitative images, including AI-generated deepfakes, and requires platforms to remove such images within 48 hours of notification. The legislation has passed the Senate and is awaiting consideration by the House of Representatives.

In September 2024, some of the largest US technology companies – including Adobe, Anthropic, Cohere, Microsoft, OpenAI and the open-source web data repository Common Crawl – signed a non-binding pledge to prevent their AI products from being used to generate non-consensual deepfake pornography and child sexual abuse material.

As long as there is demand for this sickening content, there will be scumbags willing to let users create it and spread it through websites. The main purpose of this article is to highlight the harm that online sexual exploitation enabled by AI face-swapping technology does to digital audiences, especially children, including damage to their mental health and physical safety. In response to these challenges, it proposes governance measures from multiple perspectives. Through the joint efforts of governments, technology companies and social media service operators, respect, civility and inclusiveness in the digital space can be promoted, while online harm is combated and the digital environment is protected.

References

Antoniewicz, S. (2025, March 24). AI social media guardians: the new frontier in protecting kids online. The Drum. https://www.thedrum.com/news/2025/03/24/ai-social-media-guardians-the-new-frontier-protecting-kids-online

CNN. (2022, May 20). A 17-year-old boy died by suicide hours after being scammed. The FBI says it’s part of a troubling increase in “sextortion” cases. CNN. https://edition.cnn.com/2022/05/20/us/ryan-last-suicide-sextortion-california/index.html

Huang, B., Ma, J., Wang, G., & Wang, H. (2025). HDL: Hybrid and Dynamic Learning for Fake Face Recognition. IEEE Transactions on Artificial Intelligence, 1–10. https://doi.org/10.1109/tai.2025.3537963

Jolly, N. (2025, April). AI must be regulated for kids along with social media. Mumbrella. https://mumbrella.com.au/are-friends-electric-ai-regulation-for-children-869502

Li, L., Mu, X., Li, S., & Peng, H. (2020). A Review of Face Recognition Technology. IEEE Access, 8, 139110–139120. https://doi.org/10.1109/access.2020.3011028

Lyons, J. (2025). Generative AI app goes dark after child-like deepfakes found in open S3 bucket. MSN. https://www.msn.com/en-us/news/technology/generative-ai-app-goes-dark-after-child-like-deepfakes-found-in-open-s3-bucket/ar-AA1C2lXi?ocid=BingNewsVerp

Operation Cumberland – Australian duo charged as part of global investigation into AI-generated child abuse material | Australian Federal Police. (2025, February 28). Afp.gov.au. https://www.afp.gov.au/news-centre/media-release/operation-cumberland-australian-duo-charged-part-global-investigation-ai

Wiecha, J. L., Sobol, A. M., Peterson, K. E., & Gortmaker, S. L. (2001). Household Television Access: Associations With Screen Time, Reading, and Homework Among Youth. Ambulatory Pediatrics, 1(5), 244–251. https://doi.org/10.1367/1539-4409(2001)0012.0.CO;2
