Introduction: Why Words Online Matter Offline

In 2022, a non-binary teenager in the U.S. was harassed relentlessly online after sharing a TikTok video about their gender identity. The hateful comments quickly escalated into death threats, and soon the teen was physically assaulted outside their school. Unfortunately, this is not an isolated incident. Around the world, queer and LGBT people face not only growing volumes of online hate but also real-world violence fueled by the normalization of such rhetoric on digital platforms.

Online hate speech may seem like mere words on a screen, but it causes profound real-world harm to queer and LGBT communities. One reason is that digital platforms have long failed to regulate hate speech effectively. This failure is not a simple technical oversight; it exposes deeper flaws in how platforms govern themselves. When platform policies are disconnected from actual enforcement, vulnerable groups remain exposed to verbal violence. This systemic failure has fueled online violence and deepened inequality and discrimination in society. Platforms must establish effective moderation mechanisms and accept social responsibility for protecting the rights and safety of their users.

This blog explores how hate speech operates online, how digital platforms amplify it, and how it can lead to psychological harm and physical violence. Drawing on academic literature and a case study of Donald Trump’s rhetoric targeting queer communities, this post argues that reforming the governance of online hate is an urgent task.

Understanding Online Hate Speech and Its Impact

Hate speech is speech that demeans, discriminates against, or incites violence against people on the basis of characteristics such as sexual orientation and gender identity. For queer and LGBT people, hate speech includes homophobic slurs, transphobic memes targeting gender-diverse people, attacks on specific individuals, harassment, and threats of violence. Online, these attacks can become more aggressive because perpetrators are often anonymous and their content is amplified by algorithms.

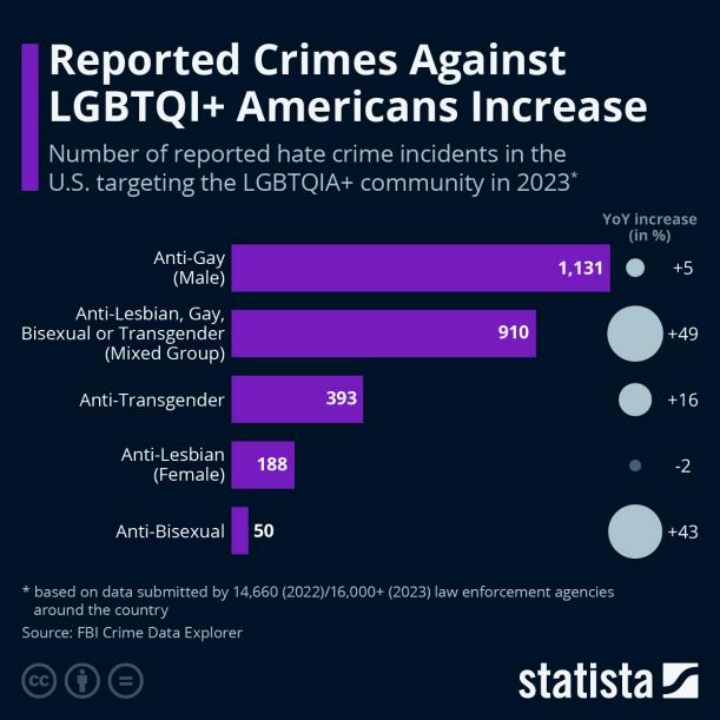

A recent study by Arcila Calderón et al. (2023) reports a direct correlation between inflammatory online speech and offline hate crimes, particularly those targeting migrant and LGBTQ+ communities. Their findings suggest that online platforms not only reflect societal hate but also amplify it and accelerate its transition into physical aggression. This is particularly dangerous for LGBT youth, who are already at higher risk of anxiety, depression, and suicide. Online hate speech compounds these risks by making digital spaces feel unsafe and hostile. Sustained attacks may lead to self-doubt and self-directed anger, forcing young people to withdraw from online platforms and, in some cases, leaving lasting psychological trauma. Platforms that are supposed to protect freedom of expression have instead become a source of harm in their lives. This systemic problem deserves serious attention and regulation.

The harm of online hate speech extends far beyond virtual space. It can profoundly affect offline society, deepening prejudice and encouraging extremist ideas. As communication scholar Flew (2021) argues, social media algorithms tend to prioritize content that triggers strong emotional reactions, so hateful or controversial speech often gains more exposure and spreads further. As such content circulates widely, hate speech gradually becomes “normalized” and comes to be treated as a tacitly accepted way of communicating.

This normalization process can have far-reaching negative consequences. When hate speech becomes widespread, it not only reinforces discrimination in society but can also trigger offline violence directly. In this process, social platforms are no longer neutral carriers of information; they actively shape social and cultural norms. For queer and LGBTQ+ groups, the impact is particularly serious: hate speech online can translate directly into discrimination in real life, exposing them to greater safety threats and identity crises in schools, workplaces, and public places.

The Digital Governance Problem

Although social media platforms publicly promote tolerance and safety, their effectiveness in controlling hate speech is questionable. Research by Sinpeng et al. (2021) found that even an industry giant such as Facebook applies its content review mechanisms unevenly around the world, with little consistency or transparency, especially in regions outside Europe and North America. In the Asia-Pacific region, for example, insufficient review staff and limited local cultural knowledge often render the platform’s hate speech policies ineffective.

The root of the problem lies in how the algorithms are designed. Social media platforms place heavy weight on user interaction, such as likes, comments, and shares. As a result, controversial and emotional content, including hate speech, spreads rapidly because it provokes strong reactions. This creates a vicious cycle: hate speech gains wide exposure, that exposure generates more interaction, and the interaction spreads and reinforces the content further across the network.

https://www.linkedin.com/pulse/what-role-do-likes-shares-comments-play-keeping-social-joanne-tan

Cultural and language barriers further complicate moderation efforts. According to Roberts (2019), content moderators work under extreme pressure, which leads to inconsistent enforcement and emotional burnout. This creates an environment where harmful content slips through the cracks. Many moderation systems are also developed from a Western-centric perspective, so they often fail to understand local slang, coded language, or context-specific forms of hate. This makes queer users in non-Western countries particularly vulnerable.

There is a clear gap between the policies platforms promise and how those policies are actually enforced. Some hate speech is removed promptly, while a large amount of harmful content remains online for long periods. Worse, review systems give special treatment to powerful users such as politicians. This selective enforcement further undermines platforms’ credibility. GLAAD (2023) adds that disinformation targeting LGBTQ+ groups is often left unaddressed by major platforms, highlighting a systemic gap in both detection and response.

The concept of “platformed racism” proposed by Matamoros-Fernández (2017) shows that digital platforms do not merely host hateful content but actively shape how it spreads. The concept extends to LGBTQ+ discrimination as well: the very infrastructure of digital spaces enables, and profits from, homophobic and transphobic content.

https://twitter.com/PVAenglish/status/1676209713082515460/photo/1

Current content review mechanisms suffer from a serious lack of accountability and transparency, which causes continued harm to marginalized groups. Without effective external oversight, platforms essentially police themselves. This laissez-faire model of governance has had disastrous consequences for queer and LGBT minority groups.

Case Study: Donald Trump’s Rhetoric and Its Impact on Queer and LGBT Communities (U.S.)

During Trump’s administration, the rights of the LGBTQ+ community were significantly weakened and hateful rhetoric increased. Not only did his government roll back a number of policies protecting LGBTQ+ people in health care and employment, but he also frequently shared content undermining the rights and dignity of the LGBTQ+ community on social media (he had roughly 90 million followers on Twitter), further exacerbating social conflict.

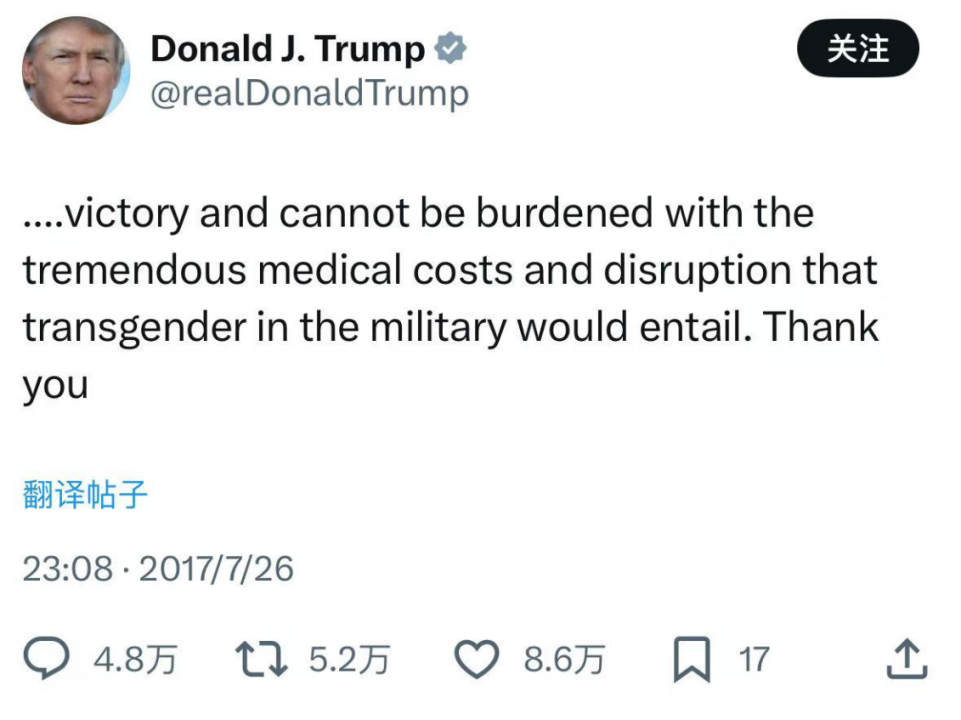

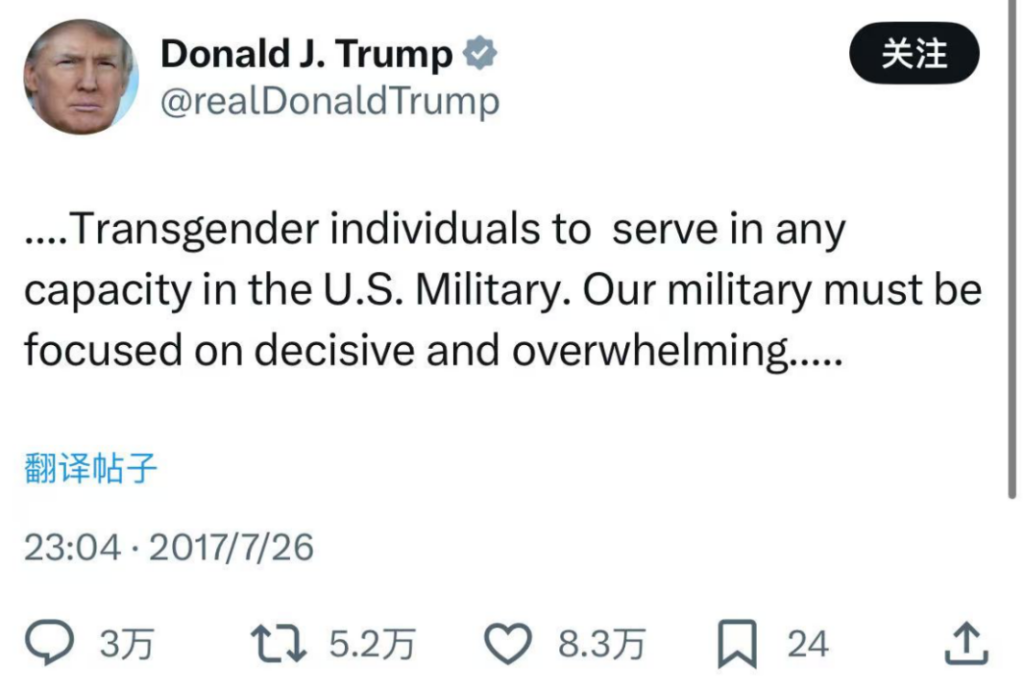

A representative example came in 2017, when Trump tweeted that transgender individuals should not be allowed to serve in the U.S. military, citing medical costs and disruption. The tweet triggered a wave of online attacks on transgender service members, spawning large numbers of transphobic memes, hostile videos, and offensive comments.

https://twitter.com/realdonaldtrump/status/890197095151546369?s=46

Despite its hate speech policy, Twitter left Trump’s post intact, citing public interest. This selective enforcement allowed hate to flourish. As Gelber noted, when powerful figures are not held accountable, hate speech becomes legitimized.

Offline, this rhetoric emboldened discrimination in schools, workplaces, and public spaces. Transgender individuals reported increased violence and marginalization, underscoring the tangible consequences of unchecked online hate.

Amnesty International (2023) reported a dramatic increase in hate speech against LGBTQ+ users on Twitter after Elon Musk’s takeover, with content moderation policies being weakened and hate speech left to circulate widely. This underscores how leadership and governance shifts can drastically affect marginalized communities.

This case illustrates how digital rhetoric from powerful figures translates into real-world harm. Platforms that fail to act are not neutral observers—they become complicit. Trump’s online hate speech wasn’t just divisive; it was dangerous.

Why Hate Speech Against Queer and LGBT Groups Persists

Social media’s business model prioritizes engagement over safety. Flew (2021) explains that inflammatory content sustains user attention, aligning hate speech with commercial incentives.

Sinpeng et al. (2021) also highlighted regional disparities in moderation in the Asia-Pacific; such disparities leave queer users in Asia, Africa, and the Middle East more vulnerable. Many platforms also rely heavily on user reporting rather than proactive enforcement.

Online hate speech directed at the LGBTQ+ community is often intertwined with other forms of hate. Carlson and Frazer (2018) note that Indigenous LGBTQ+ users face unique risks online, dealing with both racism and homophobia. Digital spaces reproduce real-world hierarchies, amplifying harm for people with intersecting marginalized identities.

Dinar (2021) highlights that while the EU’s Digital Services Act offers potential protections, many platforms still lack the incentive or accountability to properly moderate LGBTQIA+ content. Algorithms continue to promote inflammatory posts, and current regulations fail to keep up.

Massanari (2017) further criticizes platform design, explaining how Reddit’s upvoting system allows toxic technocultures such as #Gamergate to thrive; similar technocultures also target LGBTQ+ individuals.

What Needs to Change

Fighting online hate effectively requires systemic reform of the platforms themselves. The keys to success are improving algorithmic mechanisms, blocking harmful content, and protecting freedom of speech in a principled way. This involves training artificial intelligence moderation models on diverse content and continuously monitoring and correcting them for bias.

https://www.accessnow.org/campaign/digital-rights-lgbtq-africa

Government regulation is also essential. Legislation should require platforms to adopt effective harm-prevention measures, not by restricting rights such as freedom of speech, but by setting clear requirements for removing content that incites violence or discrimination.

GLAAD (2022) advocates for mandatory transparency reports from platforms, tracking hate speech trends and moderation outcomes. These reports can pressure companies to address blind spots and act more consistently.

Digital education is also vital. It helps users recognize hate speech, report it, and support its victims. Community action within the queer community can also foster safer spaces.

Change requires society as a whole. Platforms, governments, and users need to join forces to make the Internet a force for good rather than harm. Protecting queer and LGBT people online also means protecting them offline.

Conclusion

Hate speech, which began as an Internet issue, has become a pressing public safety concern. For the queer and LGBT community, the repercussions of unchecked digital violence are all too real. From psychological trauma to physical violence, the link between hateful speech and real-world harm is evident, and its consequences can be dire.

In this blog, I have examined how online hate speech works, why it persists, and why digital platforms have failed to curb it. The Trump case makes clear how much damage is done when platforms fail to act. Meanwhile, Flew (2021), Sinpeng et al. (2021), and Matamoros-Fernández (2017) show the structural and policy failings that allow hatred to spread.

This is not just another post highlighting the damage being done; it is a call to action. Much better regulation, more advanced technology, and significant community-level support are needed. Digital platforms have the power to shape culture, and now is the time for them to prove that this power can protect the vulnerable instead of harming them.

Creating a safe online space for the LGBTQ+ population is an absolute necessity. As Arcila Calderón et al. (2023) and GLAAD (2023) emphasize, failure to regulate digital hate fosters real harm. Meaningful change requires policy reform, platform accountability, and community resilience. Digital space must be reimagined as a place of protection, not peril. This is a matter of urgency. Behind every screen is a human life, and its dignity and safety must take priority over platforms’ pursuit of maximum traffic.

References

Amnesty International. (2023). Hateful and Abusive Speech Towards LGBTQ+ Community Surging on Twitter Under Elon Musk. https://www.amnesty.org/en/latest/news/2023/02/hateful-and-abusive-speech-towards-lgbtq-community-surging-on-twitter-surging-under-elon-musk/

Arcila Calderón, C., et al. (2023). From online hate speech to offline hate crime: The role of inflammatory language in forecasting violence against migrant and LGBT communities. https://www.nature.com/articles/s41599-024-03899-1

Carlson, B., & Frazer, R. (2018). Social Media Mob: Being Indigenous Online. Macquarie University. https://researchers.mq.edu.au/en/publications/social-media-mob-being-indigenous-online

Dinar, C. (2021). The State of Content Moderation for the LGBTIQA+ Community and the Role of the EU Digital Services Act. https://eu.boell.org/en/2021/06/21/state-content-moderation-lgbtiqa-community-and-role-eu-digital-services-act

Flew, T. (2021). Hate Speech and Online Abuse. In Regulating Platforms (pp. 91–96). Cambridge: Polity Press.

GLAAD. (2022). State of the Field: LGBTQ Social Media Safety Reports. https://glaad.org/smsi/state-of-the-field-lgbt-social-media-safety/

GLAAD. (2023). Guide to Anti-LGBTQ Online Hate and Disinformation. https://glaad.org/smsi/anti-lgbtq-online-hate-speech-disinformation-guide/

Massanari, A. (2017). #Gamergate and The Fappening: How Reddit’s algorithm, governance, and culture support toxic technocultures. New Media & Society, 19(3), 329–346.

Matamoros-Fernández, A. (2017). Platformed racism: The mediation and circulation of an Australian race-based controversy on Twitter, Facebook and YouTube. Information, Communication & Society, 20(6), 930–946. https://doi.org/10.1080/1369118X.2017.1293130

Roberts, S. T. (2019). Behind the Screen: Content Moderation in the Shadows of Social Media. Yale University Press.

Sinpeng, A., Martin, F., Gelber, K., & Shields, K. (2021). Facebook: Regulating hate speech in the Asia Pacific. Final Report to Facebook under the auspices of its Content Policy Research on Social Media Platforms Award. Dept of Media and Communication, University of Sydney and School of Political Science and International Studies, University of Queensland. https://r2pasiapacific.org/files/7099/2021_Facebook_hate_speech_Asia_report.pdf
