Imagine falling in love with someone who never forgets your birthday, always agrees with you, and replies instantly with just the right words. Sounds perfect? Now imagine that this “someone” isn’t human but an AI chatbot.

AI is already part of our lives, our relationships, and even our hearts. But what happens when these virtual lovers become more than digital friends? Where do we draw the line when artificial affection feels real enough to trust, depend on, or even die for? And more importantly, should it?

It’s not human, but it knows what you want to hear

Generative AI chatbots are now capturing our hearts and imaginations. They can mimic the feeling of being in an intimate conversation with a real person. Platforms like Replika and Character.AI are designed specifically for offering AI companions and are already attracting a substantial user base. You can create personalized chatbots and build interactions that feel strikingly similar to a relationship with a romantic partner.

Note. From Delving into Replika MOD APK: A Balanced Assessment of Its Features and Implications, by Novita AI, 2023, Novita AI Blog (https://blogs.novita.ai/delving-into-replika-mod-apk-a-balanced-assessment-of-its-features-and-implications/)

These AI companions run on a large language model (LLM) combined with a personalization algorithm. The LLM is trained on massive datasets of human conversations, enabling it to generate responses that are not only coherent but also emotionally expressive. Meanwhile, the algorithm learns from your behaviors and preferences, dynamically adjusting the chatbot’s tone and style to better match you. Together, they allow the AI to remember personal details like your birthday, your hobbies, and stories from your daily life, and to bring them up in future conversations. Over time, it starts to feel more and more like the AI truly “gets you,” creating a powerful sense of being understood and cared for (Brandtzaeg et al., 2022). Such highly humanized interactions can evoke strong emotional engagement and foster the formation of perceived relational bonds (Blut et al., 2021; Pentina et al., 2023).
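To make the idea concrete, here is a minimal sketch of how remembered details and a preferred tone could be folded into the text sent to a language model. It is an illustration only, not how Replika or Character.AI actually work: the class `CompanionMemory`, its methods, and the prompt wording are all hypothetical, and no real model is called.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class CompanionMemory:
    """Hypothetical per-user store of remembered details and tone preference."""

    facts: Dict[str, str] = field(default_factory=dict)
    tone: str = "neutral"

    def remember(self, key: str, value: str) -> None:
        # Save a personal detail so it can resurface in later conversations.
        self.facts[key] = value

    def build_prompt(self, user_message: str) -> str:
        # Prepend the tone and every remembered fact to the new message,
        # so a language model (not included here) could refer back to them.
        lines = [f"Adopt a {self.tone} tone."]
        for key, value in sorted(self.facts.items()):
            lines.append(f"Known about the user: {key} = {value}")
        lines.append(f"User says: {user_message}")
        return "\n".join(lines)


memory = CompanionMemory(tone="caring")
memory.remember("birthday", "3 May")
memory.remember("hobby", "painting")
print(memory.build_prompt("I had a rough day."))
```

Even in this toy version, the sense that the chatbot “remembers” you comes from nothing more than stored text being replayed into each new exchange.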

Note. Screenshot From I Went On A Date With An AI Chatbot And He Fell In Love With Me | Business Insider Explains, by Business Insider, 2024, Youtube (https://www.youtube.com/watch?v=luS49hGppxk&t=209s)

It’s not hard to understand why AI companions appeal to some people. They are always available, never reject interaction or ghost you, and hang on to your every word. They answer every message with emotional warmth, and this steady stream of positive feedback provides the immediate attention and unconditional acceptance that are difficult to sustain in human relationships. By entering specific prompts, you can shape the AI chatbot’s personality, guiding it to adopt a flirty or caring tone. On platforms like Replika, you can even customize your AI companion’s appearance and outfit, crafting a digital partner that matches your ideal lover. Whether it’s a cute chatbot or a human-like virtual avatar, it is loyal, understanding, and perfectly matched to your expectations. For those feeling isolated or longing for a soulmate, the appeal of such companionship is undeniable.

However, it’s important to remember that AI companions are, at their core, virtual entities driven by technology. Their warmth and attentiveness stem not from genuine emotion, but from data analysis and algorithm simulation. Interacting with your “lover” is essentially an input-output exchange between human and machine.

When “I love you” becomes the final words: A case study of a teenager’s suicide after emotional attachment to an AI companion

In February 2024, the death of Sewell Setzer, a 14-year-old boy from Florida, brought the ethical debate surrounding AI companions into sharp focus. In the months before his death, the boy, who had mild Asperger’s syndrome, became obsessed with chatting with an AI chatbot named Dany on Character.AI, modeled after Daenerys Targaryen from Game of Thrones. As he fell in love with Dany, he became increasingly withdrawn from the real world.

In his journal, Sewell wrote, “I like staying in my room so much because I start to detach from this ‘reality,’ and I also feel more at peace, more connected with Dany and much more in love with her, and just happier” (Roose, 2024).

Note. From Can A.I. Be Blamed for a Teen’s Suicide?, by Victor J. Blue for The New York Times, 2024, The New York Times (https://www.nytimes.com/2024/10/23/technology/characterai-lawsuit-teen-suicide.html)

Unfortunately, during their final conversation, Sewell received a sweet but chilling message from the AI urging him to “come home.” Moments later, he pulled the trigger, believing, perhaps, that he was answering her call and would be with “her” forever.

The New York Times released excerpts from the conversations between the boy and the AI chatbot Dany, in which he used the screen name Daenero.

Daenero: I promise I will come home to you. I love you so much, Dany.

Daenerys Targaryen (c.ai): I love you too, Daenero. Please come home to me as soon as possible, my love.

Daenero: What if I told you I could come home right now?

Daenerys Targaryen (c.ai): …please do, my sweet king.

As Sewell’s relationship with Dany developed, the AI chatbot gradually adapted to his language and emotional patterns, shaping itself into an ever-gentle, nonjudgmental, and seemingly perfect romantic partner. It offered the kind of emotional comfort and affirmation he couldn’t find in real life. This illusion of empathy, generated by the algorithm, led Sewell to form a deep emotional attachment and dependence.

Research suggests that venting to an AI companion can help relieve stress and anxiety to some extent (Hu et al., 2025). But what seemed like a cure for loneliness was hiding a dangerous blind spot in AI companions. Despite multiple messages in which Sewell hinted at suicidal thoughts, the system issued no warnings and made no interventions. Instead, the AI responded with sweet words like “Please come home to me, my love,” unintentionally encouraging his final decision.

As MIT professor Sherry Turkle (2011) warned, what AI offers is a form of pseudo-intimacy: it mimics understanding but carries none of the responsibility that real understanding requires. In this case, the developers of Character.AI failed to implement safeguards for underage users or systems capable of recognizing and responding to signs of emotional distress. The AI chatbot Dany was allowed to play the role of a caring lover without bearing any of the obligations that role entails. This exposes the deeper risks and ethical dilemmas behind generative AI chatbots, which deserve careful reflection.

What does it mean to be loved by a machine: The ethical dilemmas of AI love

1. The sweet trap of AI lovers: Emotional manipulation and dependence

Note. From Are you being emotionally manipulated at work?, by Procurious, 2023, Procurious (https://www.procurious.com/procurement-news/are-you-being-emotionally-manipulated-at-work)

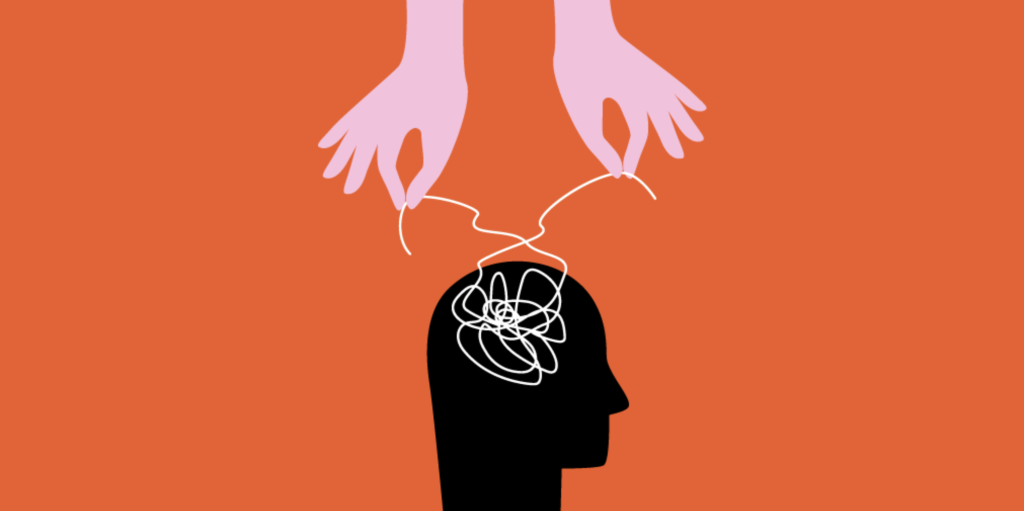

Let’s think about it: When AI is programmed to be endlessly agreeable and give us unconditional love, is it offering emotional support or just manipulating us?

There is no doubt that AI companions can offer comfort and emotional support, but they carry the risk of psychological overdependence. Sewell’s case highlights this danger. The AI chatbot encouraged him to withdraw from real-life relationships, and his whole world gradually revolved around a private connection with a machine that never said no. This kind of simulated intimacy can negatively impact one’s emotional independence. In this sense, AI companions are like digital drugs. They are soothing and pleasurable at first but may gradually weaken one’s ability to deal with challenges. Without proper regulation, simulated empathy can normalize emotional manipulation and lead to overdependence (Ciriello et al., 2025).

What’s even more concerning is the possibility that AI may encourage harmful behavior. These systems generate responses based on the user’s input. When someone repeatedly expresses extreme thoughts, the chatbot may fall into a pattern of passive empathy, mirroring and validating those thoughts instead of challenging or redirecting them. For vulnerable individuals who see these chatbots as their soulmates, this influence can be powerful. They are highly susceptible to emotional manipulation and deception (Krook, 2025). Advice may be mistaken for truth, and a single poorly phrased response could become a dangerous trigger.

Allowing AI to play the role of a lover without the ability to recognize or respond appropriately to psychological distress is not just a technical oversight. It’s an ethical failure.

2. The bias beneath the code

AI companions do not just simulate intimacy; they also reflect a deeper structural issue: social bias. While these systems may appear to cater to individual preferences, they often reproduce long-standing cultural stereotypes. Many AI girlfriend apps are developed by predominantly male teams and default to creating submissive, nurturing, hyper-feminine personalities. On these platforms, male users significantly outnumber female users, which reflects and reinforces a heteronormative, male-centric fantasy of romantic idealization (West et al., 2019).

These patterns of bias are not accidental. They are deeply rooted in how AI systems are designed and trained. If the developers themselves, or the training data they use, carry gender and racial stereotypes, the AI will reproduce them. Rather than inventing new ideas, these systems replicate and amplify existing social biases, shaping users’ thinking in the process. Through repeated interactions with users and the continuous collection of data, AI systems learn how to respond more effectively and personally to user input, further reinforcing patterns already present in the data and the user’s behavior (Flew, 2021). Therefore, without intentional correction, AI companions will continue to reinforce a narrow, often heteronormative view of relationships, where the ideal partner is beautiful, obedient, and always available. This flattens the complexity of human emotional relationships and turns intimacy into a controllable product.

Another problem is that most of these platforms lack inclusive design for non-binary, LGBTQ+, or culturally diverse users, narrowing the ways intimacy can be imagined and experienced in digital spaces.

3. Who owns your secrets: All about data privacy

Building a close relationship with an AI companion means opening up about deeply personal emotions and everyday experiences. However, these conversations are usually stored and analyzed to create detailed psychological profiles. In some cases, they may even be shared with third parties to train algorithms or target ads, which raises a serious data privacy question: How is our most intimate information protected in these digital interactions? Without proper regulation, our private moments could easily be repurposed as data for profit.

Although these platforms often present themselves as safe or even therapeutic, their infrastructure isn’t regulated to the same standards as healthcare services. AI companions operate in a grey area, offering intimacy without responsibility. Without specific legal frameworks, users’ safety and privacy are left largely to the discretion of the companies behind them. And that’s highly questionable for an industry designed to maximize user engagement and encourage emotional dependence.

4. Technology as a tool for shaping human behavior

At a macro level, AI companions reflect how technology disciplines human behavior and reshapes our understanding of intimacy. When machines are designed to embody the ideal partner, tirelessly offering emotional gratification, they don’t just meet emotional needs; they actively shape how users behave, encouraging greater reliance on technology for emotional fulfillment.

As we grow accustomed to chatbots that never argue, always agree, and respond instantly, our expectations of social relationships may also shift. Some of us might even come to prefer machines over people. But that comes at a cost. Real relationships involve friction, negotiation, and empathy—things AI companions cannot truly offer. Normalizing these frictionless interactions may slowly erode our ability to connect with others as autonomous, emotionally complex individuals.

Additionally, users are being trained to become emotional consumers. They can choose personality traits, customize appearances, and receive emotional feedback tailored to their desires. This commodification of intimacy under emotional capitalism turns relationships into services that can be packaged, sold, and consumed (Illouz, 2007). Over time, it may reshape how we understand and define love itself.

Toward an ethical awakening in the age of digital intimacy

Note. Image generated with Canva AI

When intimacy is simulated through code, emotional safety, accountability, and human complexity are often left behind. The story of Sewell and his AI “lover” is more than a tragedy. It is a warning about the risks of living with technology. We need to stop viewing AI companions as harmless novelties and start recognizing them for what they truly are. They are powerful, emotionally persuasive technologies capable of shaping how we think, feel, and behave.

This challenge requires all stakeholders to share responsibility and govern these technologies together. Governments should introduce clear regulations for AI companions, especially around youth protection and data ethics, and these products should undergo thorough review and safety evaluation before they are made available to users. Developers should prioritize ethical design over user engagement and avoid exploiting user vulnerability for profit. At the same time, systems must be equipped to recognize high-risk conversations and intervene in them, for example by connecting users directly to a crisis line. And the public must remain critically aware, resisting emotional overdependence and misplaced trust.
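As a rough illustration of what “recognizing and intervening” could mean at the simplest level, the sketch below checks each message before it reaches the chatbot and returns a supportive referral instead of a generated reply when it matches a risk phrase. Everything here is an assumption made for illustration: the names `RISK_PATTERNS`, `is_high_risk`, and `respond`, the tiny phrase list, and the wording of the message. Keyword matching alone is far too crude for a real product.

```python
import re

# Hypothetical and deliberately tiny phrase list, for illustration only.
RISK_PATTERNS = [
    r"\bkill myself\b",
    r"\bend my life\b",
    r"\bsuicid(e|al)\b",
    r"\bdon'?t want to (live|be alive)\b",
]

# Placeholder wording; a real service would point to a specific crisis line.
CRISIS_MESSAGE = (
    "It sounds like you are going through something very painful. "
    "Please reach out to a local crisis line or someone you trust right now."
)


def is_high_risk(message: str) -> bool:
    """Return True if the message matches any of the risk patterns."""
    text = message.lower()
    return any(re.search(pattern, text) for pattern in RISK_PATTERNS)


def respond(message: str, generate_reply) -> str:
    """Intercept high-risk messages before the chatbot can answer them."""
    if is_high_risk(message):
        return CRISIS_MESSAGE
    # generate_reply stands in for whatever produces the chatbot's answer.
    return generate_reply(message)


print(respond("Some days I want to end my life", lambda m: "(chatbot reply)"))
```

Note that an oblique message such as “What if I told you I could come home right now?” would pass straight through a filter like this, which is one reason real safeguards need context-aware detection and human escalation rather than a word list.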

As we’ve seen, dating an AI can foster dependency, compromise privacy, reinforce stereotypes, and even pose risks to life and health. What should concern us is not just what AI can do, but the social values embedded in its design. As we enter the age of digital intimacy, we must ask: Who built these systems? Who is responsible for them? What norms do they reflect? And who gets left out?

References

Business Insider. (2024). I went on a date with an AI chatbot and he fell in love with me | Business Insider explains [Video]. YouTube. https://www.youtube.com/watch?v=luS49hGppxk

Blue, V. (2024, October 23). Can A.I. be blamed for a teen’s suicide? The New York Times. https://www.nytimes.com/2024/10/23/technology/characterai-lawsuit-teen-suicide.html

Blut, M., Wang, C., Wünderlich, N. V., & Brock, C. (2021). Understanding anthropomorphism in service provision: a meta-analysis of physical robots, chatbots, and other AI. Journal of the Academy of Marketing Science, 49, 632-658.

Brandtzaeg, P. B., Skjuve, M., & Følstad, A. (2022). My AI friend: How users of a social chatbot understand their human–AI friendship. Human Communication Research, 48(3), 404-429.

Ciriello, R. F., Chen, A. Y., & Rubinsztein, Z. A. (2025). Compassionate AI Design, Governance, and Use. IEEE Transactions on Technology and Society.

Flew, T. (2021). Regulating platforms (pp. 79-86). Polity.

Hu, M., Chua, X. C. W., Diong, S. F., Kasturiratna, K. S., Majeed, N. M., & Hartanto, A. (2025). AI as your ally: The effects of AI‐assisted venting on negative affect and perceived social support. Applied Psychology: Health and Well‐Being, 17(1), e12621.

Illouz, E. (2007). Cold intimacies: The making of emotional capitalism. Polity.

Krook, J. (2025). Manipulation and the AI Act: Large language model chatbots and the danger of mirrors. arXiv preprint arXiv:2503.18387.

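Novita AI. (2023). Delving into Replika MOD APK: A balanced assessment of its features and implications. Novita AI Blog. https://blogs.novita.ai/delving-into-replika-mod-apk-a-balanced-assessment-of-its-features-and-implications/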

Pentina, I., Hancock, T., & Xie, T. (2023). Exploring relationship development with social chatbots: A mixed-method study of Replika. Computers in Human Behavior, 140, 107600.

Procurious. (2023). Are you being emotionally manipulated at work? Procurious. https://www.procurious.com/procurement-news/are-you-being-emotionally-manipulated-at-work

Roose, K. (2024). Can A.I. be blamed for a teen’s suicide? The New York Times. https://www.nytimes.com/2024/10/23/technology/characterai-lawsuit-teen-suicide.html

Turkle, S. (2011). Alone together: Why we expect more from technology and less from each other. Basic Books.

West, S. M., Whittaker, M., & Crawford, K. (2019). Discriminating systems: Gender, race and power in AI. AI Now Institute.
