Introduction

Recently, on China’s TikTok platform, a phenomenon has been published and reposted by many “we media”. Netizens found that under the video of a couple arguing, the winds of the comment sections of male and female users were completely opposite, showing completely different opinions. The hot comments in the comment section of male users were from the male perspective and represented the male position, while the hot comments in the comment section of female users were in support of women’s position. The platform’s algorithms control the ranking and recommendation of the content seen by various users based on the user’s gender, age and other information. Algorithms have formed different treatment among different groups, which we can call algorithmic bias. Without knowing the operation of the black box of algorithms, various groups are affected by algorithmic bias, so wrapping people in a “filter bubble” of views that are nearly the same as their own, which may lead to the situation that people only obtain information that confirms their views or communicate with like-minded people (N Just, M Latzer, 2017). As a result, groups are separated from each other, and the aggregation and deepening of identification with some of the negative views may also exacerbate social inequality and affect the rights and interests of the affected groups.

So why are algorithms biased, and how do they create “filter bubbles” or information cocoons, and how do these divide us? In this post, I will discuss the complexity of algorithmic bias and filter bubbles and see their impact on online discourse and society. This blog might give you something to think about.

Get to know the algorithmic bias

What is algorithmic bias? The topic of algorithmic bias has received a lot of attention in academia. A number of scholars have made their own definitions of it, but in a general and broad definition, algorithmic bias refers to systematic errors in the decision-making process in computer algorithms, machine learning, and other models due to factors such as algorithm design, databases, or application scenarios, which can lead to bias or discrimination against certain groups or characteristics.

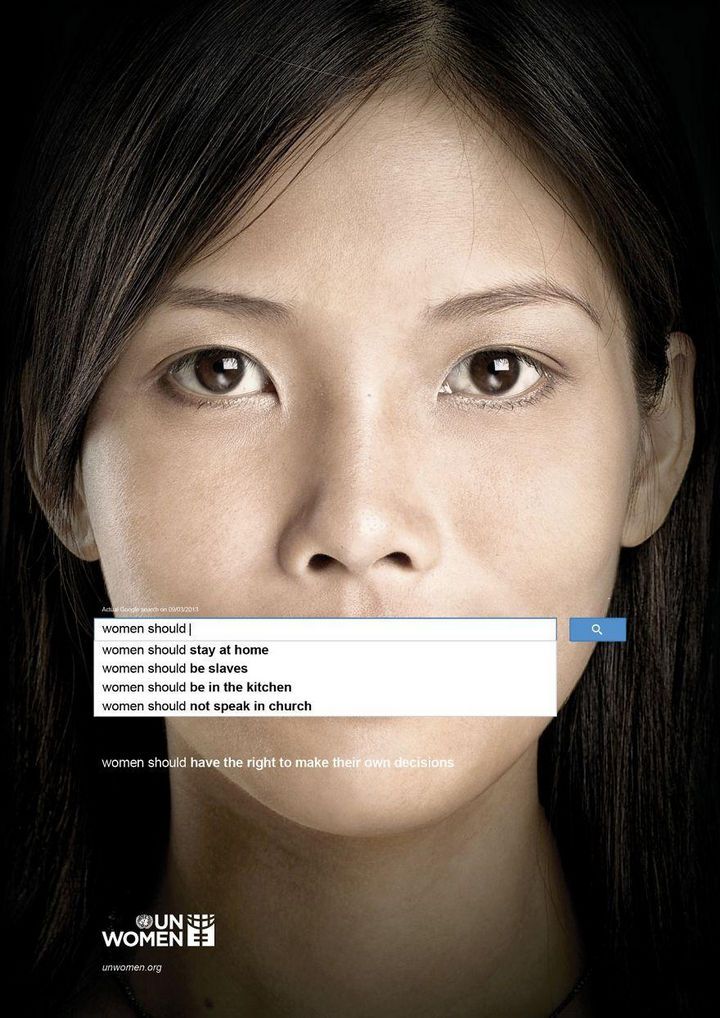

We can’t live without algorithms, so algorithmic bias is everywhere. Take searching engine as an example, Internet search engine has become an indispensable tool for people’s information retrieval in the digital age. With its rich knowledge reserve, huge database and fast cloud computing capabilities, it has become another brain that helps humans in doing information technology. Due to the algorithm mechanism acts on the content recommendation and content filtering of search engines, search engines often bring bias or discrimination information when performing information completion. For example, SU Noble found that in the index of trillions of web pages crawled by Google search, searches for “Black girl, “”Black Booty on the Beach,” and “Sugary Black Pussy “appear on the first page of Google search results (SU Noble, 2018). In addition, when searching for ‘women need’ and ‘women should’ in the search engine, the relevant complementary entry is ‘women should [stay in the kitchen]’. In response to this highly discriminatory phenomenon, UN Women has also launched an anti-discrimination campaign (UN women, 2013).

When one only needs to enter the first few letters or words in a search engine, the search bar already presents all the words that can be associated with it in the form of a drop-down box. However, those words with tendencies or discrimination will directly enter people’s vision without any defense, and these discriminatory or prejudicial messages may lead people to strengthen stereotypes and prejudices, and exacerbate the separation between groups. Of course, this reflects the algorithms that shape people’s thoughts and online experiences by prioritizing certain content.

So how does algorithmic bias arise?

People often think that algorithms belong to machines, are not emotional, neutral, relatively fair, but it is not, algorithms are in fact human beings in the guise of machines, but the public often ignores this point.

“Part of the challenge of understanding algorithmic repression is understanding that the mathematical formulas that drive automated decisions are made by humans. While we often think of terms like “big data” and “algorithms” as being benign, neutral, or objective, they are anything but.” —Safiya Umoja Noble

As artificial products, algorithms inevitably carry human mind, which is a model trained on existing data and knowledge, it mirrors and reproduces society. So, to realize this is to understand why algorithmic bias exists. But we also need to understand how algorithms work in order to quickly recognize the effects of algorithmic bias, and we often don’t really understand algorithms, yet we often fail to truly understand algorithms. In Fairness and Abstraction in Sociotechnical Systems, the authors mention that the first lessons in computer science teach students that systems can be described as black boxes, precisely defined by their inputs, outputs, and the relationships between them (Selbst, Boyd et al. 2019). Although we can generalize the operation mechanism of the algorithm, that is, people first input massive data to the computer, generate instructions, and the machine comes up with a solution to a specific problem based on the written model, i.e. outputs the result. But it is almost impossible for us to grasp how they work, e.g. what kind of data is being imported, what is the filtering mechanism, etc. Pasquale (2015) says algorithm is like a black box: opaque, complex, and hard for non-experts to understand (Flew, 2021). So it is not known whether biased information is already imported at the time of input, or whether the algorithm obtains biased information during its operation.

Based on the above analysis, we can conclude that the causes of algorithm prejudice can be broadly categorised into three types.

The first is data bias. Data is the cornerstone of the algorithm, and once the data is biased at the point of import into the production process, then there will be input errors resulting in output errors. For instance, subjective preferences are injected during manual input, some data itself is discriminatory but not detected, or some data is insufficient or lost, all of which can result in the presentation of algorithmic bias in the results.

Governance by algorithms: reality construction by algorithmic selection on the Internet (Just and Latzer, 2017) mentioned that the statistical laws in language corpora encode known social biases in word embeddings (e.g. the word vector for family is closer to the vector for women than for men). The importation of these unconscious but biased corpora result in bias.

The second is algorithmic model bias. Once we have identified the goal to be computed or predicted, we usually use learning algorithms to analyze existing historical examples and mine them for usable patterns. This also means that all machine learning algorithms produce some data that reflects historical data. It mirrors our past, reflecting our background, purpose, or other information, and is constantly adjusted over time. Therefore, when there is some kind of bias in the historical data, the generated algorithmic model will usually reflect them (Danks & London, 2017; Mitchell et al., 2021). Going back to the example of content difference between male and female users mentioned at the beginning of my article, the algorithm model is prone to bias specific features in the learning process, such as recommending car and political related content to male users under the algorithm model, while giving priority to clothing, cooking and other content to female users.

The third is process bias. The algorithm is not unchanged. It will be updated. In the actual application of the algorithm, it will face user feedback. That is, the algorithm will learn user habits, speech, etc. and then return to the algorithm system for another output, and this output will become the input data of the next cycle. Let me give you an example. Back in 2016, Microsoft launched Tay, an experimental AI chatbot. But Tay was shut down a day later for his obscene and inflammatory tweets, as he became a bigoted extremist after accepting and learning other people’s racial slurs and other ideas from his interactions with Twitter users (Neff & Nagy, 2016). Therefore, the algorithm bias re-learns and updates the data in the cycle of human-computer interaction, which will also reinforces the original social bias.

Formation of filter bubbles

What are filter bubbles? Eli Pariser (2011) proposed the concept of “filter bubble” in 2011. He believes that filter bubble is based on big data and algorithms, generates user portraits according to user habits, usage time, etc., thus filtering impurity information and presenting personalized content to users through algorithms. This kind of personal network information space formed after information filtering is called “filter bubble”. He also raises the concern that this phenomenon could hinder the exchange of diverse views or even tear apart social consensus. This is a good illustration of the phenomenon raised at the beginning of the article, male and female users have different ideas, so that the algorithm generates completely different content when personalized recommendation, making the two genders form their own circles of thought and continue to deepen the sense of identity through comments that share the same ideas as their own. However, if sensitive ideas are involved, such “filter bubbles” are likely to bring about group antagonism and social division.

The relationship between filter bubbles and algorithmic bias

It may be said that filter bubbles and algorithmic bias influence each other, but if you look at the initial point, it is the algorithm that bring the filter bubble phenomenon and the algorithmic bias that deepens the filter bubble effect. The basis of the operation of the platform is the algorithm, and the essence of the algorithm is data analysis, which will filter the information according to the collected information or what we called personalized push.

As we all know, the algorithms of search engines and social media platforms usually recommend content according to the user’s network behavior habits and preferences. If the user clicks, shares certain content or interacts with certain information, the algorithm will infer that the user is interested in such content and continue to recommend similar information to the user. This filtering of information creates a user-friendly space for users, but at the same time makes it possible to receive only part of the content. It can improve the user experience in some cases, but for discussions of specific or sensitive topics, this way of working can exacerbate the separation between filter bubbles and people’s opinions, because users will only see information that matches their existing opinions, thus reinforcing biases. Algorithms constitute a form of what Adrienne Massanari (2017) calls “platform politics,” where “design, policy, and norms… The collection encourages certain types of cultures and behaviors to merge on the platform, while implicitly discouraging others (Flew, 2021). And the algorithms operate in opaque black boxes, with algorithmic bias arising from the algorithmic mechanisms of each platform. Social media platforms, search and recommendation engines influence what everyday users see and don’t see (Bozdag, 2013) . The presence of these biases makes content recommendations significantly biased. Thus, in the process of content distribution, information filtering and personalised recommendations form a “filter bubble” that makes users ignore the existence of opposing views to their own, so that once ideas collide, it is a horrible polemic.

Algorithmic bias and filter bubble is dividing us

In Regulating Platforms, Flew (2021) mentions some of the challenges that algorithms pose in shaping individuals and society, he also mentions a question about filter bubble, “Could machine learning generated using online websites bias the machine toward people and content that reinforce existing beliefs and away from people and content that deviate from existing beliefs (Bruns, 2019; Dubois and Blank, 2018; Pariser, 2012)?” If you ask me to answer, my answer is yes. In filter bubbles, individuals are generally more inclined to engage with and accept sources of information that are consistent with their existing ideas and opinions, while ignoring or doubting information that contradicts them. When filter bubbles design a space for people to fully stand in their own shoes, it strengthens their confidence in their existing opinions and further solidifies their beliefs. Users also interact with their “peers”, such as give likes or leave a comment. When this interaction is captured by the algorithm, users will continue to receive recommendations with similar information. This feedback loop allows individuals to immerse themselves more and more deeply in information that is consistent with their views. When it comes to important issues such as society and politics, people who hold the same views are overwhelmingly dominated, and machines learn the big new data and recommend it to more users, so more people begin to assimilate, but opinions that contradict existing opinions will be less and less exposed. So internet algorithms are unwittingly messing with our perspectives and we need to hold our own thoughts.

Strategies to reduce algorithmic bias

Individuals need to learn to think critically. When we realise that we are being manipulated by algorithms, we should take the initiative to step outside of our algorithmic bubble and try to reach out to new perspectives and respect the existence of alternative viewpoints. For example, you may not be immune to algorithmic bias at this point, but you can realise that, so the user setting gender of yours is female, then, you can try looking up information on a male account and get to know a new interface.

Mechanism transparency. Increased transparency can inform the general public about the impact of algorithms, while helping platforms or companies identify biased data. But Google often uses the fact that some of the information is commercially confidential to prevent outside knowledge of its internal workings. (Flew, 2021). It is the responsibility of the platform or company to be socially responsible, and the power of just one party’s efforts is minimal.

legal regulation. Only with clear attribution of responsibility when algorithmic bias issues arise can other platforms or companies be effectively managed. Some countries have already made legal attempts. Us lawmakers have introduced a bill that would require large companies to conduct audits of machine learning-powered systems, such as facial recognition or AD targeting algorithms, to detect bias. (Robertson, 2019). But much remains to be done. It is urgent to formulate relevant regulations.

Conclusion

Algorithms may seem neutral but they are actually full of algorithmic bias. The filtering bubbles caused by algorithmic bias manipulate what we prioritise and amplify certain voices whilst marginalising others, which, if we don’t look closely enough, will unknowingly shape us, narrow our information, and divide people even society. So we urgently need some ideas and strategies to reduce the adverse effects of algorithmic bias, and try our best to reduce the algorithmic bias fundamentally. This needs to be promoted by many parties, including individuals, platforms and governments. One is to call on the public to take the initiative to accept more diverse cultures and messages. One is to increase transparency so that users and regulators can better scrutinize. Then there is enacting laws and regulations. The government needs to bring together a diverse group of academics to introduce a law that clearly defines who is responsible and how to define the scope of “bias”. Regulation of algorithms is a long way to go.

Reference

Bozdag, E. (2013). Bias in algorithmic filtering and personalization. Ethics and information technology, 15, 209-227.

Danks, D., & London, A. J. (2017). Algorithmic Bias in Autonomous Systems. Ijcai,

Flew T. Issues of Concern. In: Regulating Platforms . Polity; 2021:79-86.

Fazelpour, S., & Danks, D. (2021). Algorithmic bias: Senses, sources, solutions. Philosophy Compass, 16(8), e12760.

Just, N., & Latzer, M. (2017). Governance by algorithms: reality construction by algorithmic selection on the Internet. Media, culture & society, 39(2), 238-258.

Mahdawi, A. (2013). Google’s autocomplete spells out our darkest thoughts. The Guardian, 22.

Noble, S. U. (2018). Algorithms of oppression : how search engines reinforce racism . New York University Press.

Neff, G. (2016). Talking to bots: Symbiotic agency and the case of Tay. International Journal of Communication.

Pariser, E. (2011). The filter bubble: What the Internet is hiding from you. penguin UK.

Selbst, A. D., Boyd, D., Friedler, S. A., Venkatasubramanian, S., & Vertesi, J. (2019). Fairness and Abstraction in Sociotechnical Systems Proceedings of the Conference on Fairness, Accountability, and Transparency, Atlanta, GA, USA. https://doi.org/10.1145/3287560.3287598

Women, U. (2013). UN Women ad series reveals widespread sexism. Un Women, 21.

Image

Aksharadhool: The new digital divide For those readers, who feel more comfortable with numbers, here are the facts for I… | Catholic theology, Theology, Catholic. (n.d.). Pinterest. Retrieved April 10, 2024, from https://www.pinterest.com.au/pin/16677461093043778/

Algorithms Learn Our Workplace Biases. Can They Help Us Unlearn Them? (Published 2020). (n.d.). Pinterest. Retrieved April 10, 2024, from https://www.pinterest.com.au/pin/518125132141757915/

Discover Trendy DIY Home Decor Ideas. (n.d.). Pinterest. Retrieved April 10, 2024, from https://www.pinterest.com.au/pin/1148840186180390670/

Mahdawi, A. (2013). Google’s autocomplete spells out our darkest thoughts. The Guardian, 22.

Measuring the Filter Bubble: How Google is influencing what you click | Afiches, Examen. (n.d.). Pinterest. Retrieved April 10, 2024, from https://www.pinterest.com.au/pin/857091372849076807/

Be the first to comment