In this era of increasingly powerful algorithms, do you ever feel that big data is watching you? When we share our opinions online, post a photo, or talk to our friends, who hears our voices, and who controls the information we receive? On social platforms, we interact every day with an invisible force: recommendation algorithms. More than 70% of what people watch on YouTube and Netflix comes from algorithmic recommendations (Liu & Cong, 2023). These algorithms act as manipulators behind the scenes, determining what information we receive, influencing our opinions, and even reshaping our behavior. But how do these algorithms work, and how do they affect our daily lives and society?

What are recommendation algorithms

Recommendation algorithms on social platforms are a complex set of mechanisms that decide what content to show each user. They are computational programs that analyze user data, including users’ interactions with others, personal preferences, usage history, and similarity to other users, in order to predict what a user is likely to be interested in. This may not seem surprising. What is less obvious is how fine-grained the data are: platforms record when users open a certain kind of article, how long they spend reading certain types of content, which formats they tend to engage with (e.g., pictures, videos, or text), how fast they watch a video, where they pause, and which clips they watch over and over again (Vidal Bustamante, 2022). These predictions draw not only on each individual’s history but also on behavioral patterns across a broad user base.
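To make the idea of “similarity to other users” concrete, here is a minimal sketch of user-based collaborative filtering, one common textbook technique; it is not the method any particular platform is known to use. The users, topics, and engagement counts are invented, and `cosine` and `predict_scores` are hypothetical helper names. Each topic a user has not yet engaged with is scored by how much similar users engaged with it.

```python
from math import sqrt

# Hypothetical engagement counts per topic (e.g. likes + shares + long views).
interactions = {
    "alice": {"cooking": 5, "travel": 3, "fitness": 0, "gaming": 0},
    "bob":   {"cooking": 4, "travel": 4, "fitness": 1, "gaming": 0},
    "carol": {"cooking": 0, "travel": 1, "fitness": 5, "gaming": 4},
    "dave":  {"cooking": 5, "travel": 2, "fitness": 0, "gaming": 3},
}


def cosine(u: dict, v: dict) -> float:
    """Cosine similarity between two users' engagement vectors."""
    shared = u.keys() & v.keys()
    dot = sum(u[t] * v[t] for t in shared)
    norm_u = sqrt(sum(x * x for x in u.values()))
    norm_v = sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def predict_scores(target: str, data: dict) -> list:
    """Score topics the target has not engaged with yet.

    Each other user's engagement with a topic is weighted by how similar
    that user is to the target, then summed.
    """
    me = data[target]
    scores = {}
    for other, prefs in data.items():
        if other == target:
            continue
        sim = cosine(me, prefs)
        for topic, count in prefs.items():
            if me.get(topic, 0) == 0:
                scores[topic] = scores.get(topic, 0.0) + sim * count
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


for topic, score in predict_scores("alice", interactions):
    print(f"{topic}: {score:.2f}")
```

With these made-up numbers, Alice is recommended gaming ahead of fitness: Dave shares her taste for cooking and travel and engages heavily with gaming, so his behavior carries a lot of weight in her predictions.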

Through algorithmic technology, platforms can predict users’ browsing preferences and tendencies and deliver accurate, personalized content. Compared with traditional media, this approach is more accurate and more efficient at continuously attracting users’ attention. At the same time, in an era of information overload, it does give individuals more efficient and accurate information matching (Xu, 2022). In a fast-paced world, these algorithms let users obtain personalized information at minimal time cost.

Although the most immediate purpose of recommendation algorithms seems to be helping users manage the content they see, ultimately they are a key tool for companies to attract users and keep them engaged (Vidal Bustamante, 2022). By showing content that matches users’ personal preferences, recommendation algorithms increase the time users spend on the platform. The more users interact with content on the platform, the more effectively the platform can “commoditize” their attention and target advertising.

TikTok’s “For You” algorithm

Narayanan (2023) notes that TikTok has more recently demonstrated the power of an almost purely algorithm-driven platform. Although ByteDance keeps the exact workings of the algorithm secret, the basic mechanism of the “For You” algorithm is as follows: it gathers data from users’ historical interactions, video information, and device and account settings, and uses these data to show each user the videos most relevant to their interests (Latermedia, 2020).
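The three signal groups above can be illustrated with a toy weighted ranking. This is only a sketch: the weights, the 0-to-1 signal scores, and the `Candidate`, `relevance`, and `for_you_feed` names are all hypothetical, since ByteDance does not publish how TikTok actually combines these signals.

```python
from dataclasses import dataclass

# Hypothetical weights: the real values (if the model even works this way) are not public.
WEIGHTS = {"interaction": 0.6, "video_info": 0.3, "device_account": 0.1}


@dataclass
class Candidate:
    video_id: str
    interaction: float     # e.g. predicted chance of a like, share, or full watch (0 to 1)
    video_info: float      # e.g. how well captions, sounds, hashtags match the user's interests (0 to 1)
    device_account: float  # e.g. language, country, or device match (0 to 1)


def relevance(c: Candidate) -> float:
    """Weighted sum of the three signal groups."""
    return (WEIGHTS["interaction"] * c.interaction
            + WEIGHTS["video_info"] * c.video_info
            + WEIGHTS["device_account"] * c.device_account)


def for_you_feed(candidates: list, k: int = 3) -> list:
    """Return the ids of the k most relevant candidates, best first."""
    ranked = sorted(candidates, key=relevance, reverse=True)
    return [c.video_id for c in ranked[:k]]


candidates = [
    Candidate("v1", interaction=0.9, video_info=0.2, device_account=1.0),
    Candidate("v2", interaction=0.3, video_info=0.9, device_account=1.0),
    Candidate("v3", interaction=0.5, video_info=0.5, device_account=0.0),
    Candidate("v4", interaction=0.1, video_info=0.1, device_account=1.0),
]
print(for_you_feed(candidates))  # ['v1', 'v2', 'v3']
```

Giving interaction signals the largest assumed weight means a video the user is predicted to engage with (v1) beats one that merely matches their interests on paper (v2).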

In a study by Bhandari and Bimo (2022), participants said the accuracy of the algorithm was the reason they first became interested in TikTok and kept using it. Other participants said that when they first encountered TikTok, the content was richer and exposed them to many interesting and different things. But as the algorithm got to know them, the content became more narrowly curated, which they found somewhat annoying, and they did not know how to stop seeing the same type of content over and over again. In addition, in a survey by Luria (2023), participants expressed concern about the opacity of platform recommendation algorithms and about platforms collecting personal information; they wanted to know what content they were being shown and why.

These user concerns reveal some deeper problems behind recommendation algorithms.

Problems behind the recommendation algorithms

Many users have realized that one major problem with recommendation algorithms is privacy and security: platforms collect user data but do not disclose how the data are processed and used. In addition, because social platforms keep their algorithms secret, the algorithms operate like a “black box”. But a more serious and possibly unrecognized harm is that, under the growing influence of the human-algorithm interface, human behavior is being reshaped by algorithms (Flew, 2021).

Filter Bubbles

The term “filter bubble” describes the potential for personalized online algorithms to isolate people from a diversity of viewpoints or content, and a recommendation system could cause this phenomenon (Nguyen et al., 2014). Recommendation algorithms show people mainly the content that interests them, while content from those who disagree with them is filtered out. If a user rarely interacts with an account, or if that account’s views differ sharply from the user’s, its content can be hidden by the platform (Nicholas, 2022). Based on the information and behavior users leave on the platform, the platform separates them from information that contradicts their views, effectively isolating them in their own cultural or ideological bubble. The result is a limited, customized view of the world, which narrows individual vision and hardens ways of thinking.
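A filter bubble can arise from a simple feedback loop: the system shows what the user already engages with most, the user engages with it, and that topic’s weight grows. The toy simulation below assumes five topics, a two-slot feed, and a user who engages with everything shown; all names and numbers are hypothetical. It uses the Shannon entropy of the interest weights as a rough diversity measure and compares a purely greedy feed with one that reserves a slot for the least-seen topic.

```python
import math


def entropy(weights: dict) -> float:
    """Shannon entropy (in nats) of the normalized interest weights; higher means more diverse."""
    total = sum(weights.values())
    return -sum((w / total) * math.log(w / total) for w in weights.values() if w > 0)


def initial_interest() -> dict:
    # Hypothetical starting profile: a slight lean toward news and sports.
    return {"news": 1.2, "sports": 1.1, "science": 1.0, "music": 1.0, "art": 1.0}


def simulate(rounds: int = 20, explore: bool = False) -> float:
    """Run the show-engage-reinforce loop and return the final interest entropy."""
    interest = initial_interest()
    for _ in range(rounds):
        ranked = sorted(interest, key=interest.get, reverse=True)
        # Greedy feed: the two strongest topics. Exploring feed: strongest + weakest.
        feed = [ranked[0], ranked[-1]] if explore else ranked[:2]
        for topic in feed:
            interest[topic] += 1.0  # assumption: the user engages with everything shown
    return entropy(interest)


print(f"start:   {entropy(initial_interest()):.3f}")
print(f"greedy:  {simulate():.3f}")
print(f"explore: {simulate(explore=True):.3f}")
```

In this toy setup, a small initial lean is enough for the greedy feed to lock onto two topics, and it ends with much lower entropy than the variant with an exploration slot.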

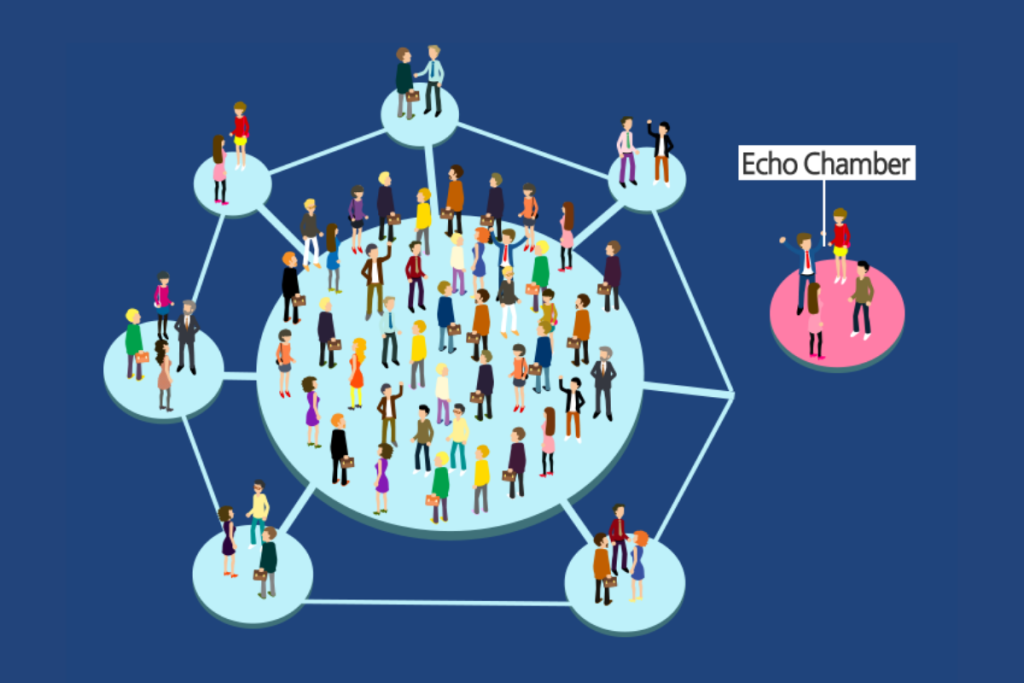

Echo Chamber Effect

If the narrowing of personal information horizons is the social impact of recommendation algorithms on individuals, then the echo chamber effect is their social impact on groups (Hu, 2019). Recommendation algorithms have strengthened the “echo chamber” effect, in which users communicate and repeat behaviors within a closed system that reinforces their preexisting beliefs, and become unwilling to accept rebuttals. Before recommendation algorithms emerged, people had to actively search for information or join online communities of interest; with recommendation algorithms, people can easily obtain the same information and find others with similar interests. Some active users post opinions to attract the attention of like-minded people, and under group pressure, these groups believe only what they think is right and reject different opinions and attitudes (Xu, 2022). For example, the comments under WeChat official account articles are often a collection of comments supporting the publisher’s views, and on Weibo, comments that differ from the original blogger’s view are countered by the blogger’s followers. In this way, dissenting voices are eliminated, leading to an increasingly biased and polarized understanding of certain issues.

Profit-driven platforms are designed to be addictive

By constantly updating their algorithms, platforms can predict users’ viewing preferences more and more accurately and thereby keep growing their user numbers. Keeping users’ attention on the platform increases how often they use it, which wins the favor of advertisers, the main source of revenue for these social platforms. Advertisers can use the collected information to serve you more advertisements or content, especially advertisements and content that make you “like” posts and browse your social media endlessly (Cocchiarella, 2021). Narayanan (2023) argues that while algorithms themselves are not harmful, the current focus on optimizing for user engagement can have negative effects. Strongly driven by the “attention economy” business model, social platforms are often designed to be addictive.

Highly personalized recommendation is designed not only to meet users’ needs but ultimately to make them addicted. Some platforms attract users’ attention by pushing carefully selected extreme or fringe content (such as violence, bullying, or crime) or eye-catching fake news, because such content tends to inspire more user interaction (Vidal Bustamante, 2022). This not only encourages the spread of misinformation but may also fuel social polarization. Recommendations can also target specific groups of people. When a platform infers from your personal information and historical behavior that you belong to a group with poor self-control or psychological vulnerabilities, it may exploit those psychological characteristics to push certain content to you, effectively labeling you as “easy to manipulate”.
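The difference between optimizing purely for engagement and optimizing with other values in mind can be shown with a small ranking sketch. The posts, their predicted engagement rates, the `sensational` flag, and the 0.5 penalty are all invented for illustration and do not describe any real platform’s system.

```python
# Hypothetical posts: engagement predictions and flags are invented for illustration.
posts = [
    {"id": "calm_explainer", "pred_engagement": 0.04, "sensational": False},
    {"id": "outrage_clip",   "pred_engagement": 0.11, "sensational": True},
    {"id": "friend_update",  "pred_engagement": 0.06, "sensational": False},
    {"id": "dubious_rumor",  "pred_engagement": 0.09, "sensational": True},
]


def rank_by_engagement(items: list) -> list:
    """Pure engagement optimization: highest predicted interaction first."""
    ranked = sorted(items, key=lambda p: p["pred_engagement"], reverse=True)
    return [p["id"] for p in ranked]


def rank_with_penalty(items: list, penalty: float = 0.5) -> list:
    """Same objective, but sensational items have their score multiplied by a hypothetical penalty."""
    def score(p):
        return p["pred_engagement"] * (penalty if p["sensational"] else 1.0)
    ranked = sorted(items, key=score, reverse=True)
    return [p["id"] for p in ranked]


print(rank_by_engagement(posts))  # ['outrage_clip', 'dubious_rumor', 'friend_update', 'calm_explainer']
print(rank_with_penalty(posts))   # ['friend_update', 'outrage_clip', 'dubious_rumor', 'calm_explainer']
```

Under pure engagement ranking, the outrage clip and the rumor take the top two slots. With the hypothetical penalty, the friend’s update rises to first, although the outrage clip still ranks second: what reaches users depends on what the objective rewards.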

Governance for social platforms using recommendation algorithms

As algorithms such as recommendation algorithms are applied in ever more fields, leaving them to develop without order will further amplify their potential risks and harms. Therefore, in addition to advancing algorithm-related technologies, we should strengthen the regulation of these platforms and the governance of algorithmic dilemmas to strike a balance between technological development and social safety (Xu, 2022).

Platforms should allow users to set and adjust their content preferences in more detail, including opting out of certain types of recommendations. They should also provide effective feedback channels, so that the algorithm can be adjusted according to users’ direct feedback.
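The two controls suggested above, opting out of a category and giving direct feedback, could work roughly like this. `PreferenceProfile` and its methods are hypothetical and not any platform’s real API; the learned weights and the 0.2 feedback step are assumed values.

```python
class PreferenceProfile:
    """Hypothetical per-user profile: learned topic weights plus explicit user controls."""

    def __init__(self, interests: dict):
        self.interests = dict(interests)  # learned topic weights
        self.opted_out = set()            # topics the user has switched off

    def opt_out(self, topic: str) -> None:
        self.opted_out.add(topic)

    def feedback(self, topic: str, liked: bool, step: float = 0.2) -> None:
        """Nudge a topic's weight up or down by `step`, never below zero."""
        delta = step if liked else -step
        self.interests[topic] = max(0.0, self.interests.get(topic, 0.0) + delta)

    def rank(self, candidates: list) -> list:
        """Drop opted-out topics, then order (video_id, topic) pairs by topic weight."""
        allowed = [c for c in candidates if c[1] not in self.opted_out]
        allowed.sort(key=lambda c: self.interests.get(c[1], 0.0), reverse=True)
        return [video_id for video_id, _ in allowed]


profile = PreferenceProfile({"diet": 0.9, "news": 0.5, "pets": 0.4})
videos = [("v1", "diet"), ("v2", "news"), ("v3", "pets")]
print(profile.rank(videos))   # ['v1', 'v2', 'v3']
profile.opt_out("diet")       # the user switches off diet content
profile.feedback("pets", liked=True)
print(profile.rank(videos))   # ['v3', 'v2']
```

The design choice worth noting is that the opt-out acts as a hard filter, while feedback only nudges the learned weights, so the user’s explicit choice always overrides what the model has inferred.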

The key to the algorithm’s “black box” is that its operation is difficult for the public to observe and understand. Algorithmic transparency requires algorithm designers to disclose the basic logic of the algorithm from input to output and to explain its processing directly and concisely, so as to open the “black box” and reduce the information imbalance between users and algorithm designers. Algorithmic transparency does not mean complete disclosure, since algorithms are confidential assets for many companies such as TikTok and Weibo (Xu, 2022). Rather, it requires these companies to explain their algorithms in ways the public can understand, eliminating the blind spots in the rules created by the “black box”. Regulations should clarify platforms’ obligation to explain their algorithms and thereby improve algorithmic transparency.
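One way to explain the basic logic “from input to output” without publishing the whole system is to report how much each signal contributed to a recommendation. The sketch below assumes a simple linear scoring model, which is far simpler than production recommenders; the signal names and weights are hypothetical.

```python
# Hypothetical linear scoring model: signal names and weights are invented.
WEIGHTS = {"watched_similar": 0.5, "followed_creator": 0.3, "trending_in_region": 0.2}


def explain(features: dict) -> tuple:
    """Return the total score and each signal's contribution, largest first."""
    contributions = {name: WEIGHTS[name] * value for name, value in features.items()}
    total = sum(contributions.values())
    reasons = sorted(contributions.items(), key=lambda kv: kv[1], reverse=True)
    return total, reasons


score, reasons = explain(
    {"watched_similar": 0.8, "followed_creator": 0.0, "trending_in_region": 0.9}
)
print(f"score: {score:.2f}")
for name, contribution in reasons:
    print(f"  {name}: {contribution:.2f}")
```

A user shown this video could be told that it was recommended mainly because they watched similar videos and, to a lesser extent, because it is trending in their region, without the platform revealing its full model.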

In addition to improving algorithm-related laws and regulations, the legal responsibility of those who deploy algorithms can be enforced through after-the-fact accountability. The constraint created by accountability guides them toward the right choices when technology raises questions of value. At the same time, the concept of the platform governance triangle can serve as a reference for multi-party joint governance (Flew, 2021). Under this model, relevant state departments, third-party regulators, and the platforms themselves each have an obligation to address potential negative impacts.

As users, what can we do to avoid this kind of manipulation

First, we can use the tools platforms provide to adjust our content recommendation preferences, and we can consciously discuss the impact of algorithms and give feedback to the platforms. Meanwhile, we must improve our information literacy: learning how to identify and evaluate the quality of information and getting better at detecting misinformation. Most importantly, we need to move from passively receiving information to actively searching for it. By searching for content from multiple perspectives, we can change what recommendation algorithms show us, regain active choice over our information, and break out of the information bubble the algorithms have built.

Conclusion

Algorithms are not completely neutral technologies; they are essentially tools for platforms to drive user engagement and monetize content. Precise algorithmic recommendation works by collecting user data to approximate users’ preferences ever more closely, thereby increasing the time users spend on the platform and their dependence on it. The average user often finds it difficult to resist this preference-based feeding. As conscious and selective consumers of information, we need to stay vigilant, promote more open discussion about the impact of algorithms, and push platforms to be more transparent and accountable.

References

Bhandari, A., & Bimo, S. (2022). Why’s Everyone on TikTok Now? The Algorithmized Self and the Future of Self-Making on Social Media. Social Media + Society, 8(1). https://doi.org/10.1177/20563051221086241

Cocchiarella, C. (2021). Manipulative algorithms and addictive design on social media. Mindful Technics. https://mindfultechnics.com/manipulative-algorithms-and-addictive-design-summing-up-whats-wrong-with-social-media/

Flew, T. (2021). Platform Regulation and Governance. In Regulating Platforms. Polity.

Hu, Q. (2019). Social reflection based on algorithmic recommendations: Individual dilemmas, group polarization, and media publicity. Media People. Retrieved April 13, 2024, from http://media.people.com.cn/n1/2019/1225/c431262-31522701.html

Latermedia. (2020). This is how the TikTok algorithm works. Later Blog. https://later.com/blog/tiktok-algorithm/

Liu, J., & Cong, Z. (2023). The Daily Me Versus the Daily Others: How Do Recommendation Algorithms Change User Interests? Evidence from a Knowledge-Sharing Platform. Journal of Marketing Research, 60(4), 767-791. https://doi.org/10.1177/00222437221134237

Luria, M. (2023, June 14). “This is Transparency to Me” User Insights into Recommendation Algorithm Reporting. https://doi.org/10.31219/osf.io/qfcpx

Narayanan, A. (2023). Understanding social media recommendation algorithms. Academic Commons. Retrieved April 13, 2024, from https://academiccommons.columbia.edu/doi/10.7916/khdk-m460

Nguyen, T. T., Hui, P.-M., Harper, F. M., Terveen, L., & Konstan, J. A. (2014). Exploring the filter bubble: The effect of using recommender systems on content diversity. In Proceedings of the 23rd International Conference on World Wide Web. ACM. https://doi.org/10.1145/2566486.2568012

Nicholas, G. (2022). Shedding light on shadowbanning. Center for Democracy & Technology. Retrieved April 12, 2024, from https://cdt.org/wp-content/uploads/2022/04/remediated-final-shadowbanning-final-050322-upd-ref.pdf

Vidal Bustamante, C. M. (2022). Technology primer: Social Media Recommendation Algorithms. Belfer Center for Science and International Affairs. https://www.belfercenter.org/publication/technology-primer-social-media-recommendation-algorithms

Xu, J. (2022). Analysis of Social Media Algorithm Recommendation System. Studies in Social Science & Humanities, 1(3), 57–63. https://www.paradigmpress.org/SSSH/article/view/229

Xu, Z. (2022). From algorithmic governance to govern algorithm. AI & Society. https://doi.org/10.1007/s00146-022-01554-4
