Do you think your smart home devices are listening to you? Have you ever seen an ad on your phone for something you had talked about with friends over dinner only hours earlier? If so, you are not alone.

Some 43 per cent of American smart device owners believe their device is listening to and recording them without their permission. Many point to advertisers’ apparent ability to show them ads for topics they had just discussed in private (Molla, 2019).

Smart home devices such as Amazon Alexa, Google Home, and Apple HomePod have become common household items, with millions of units sold worldwide. With these devices, you can do everything from playing music to managing home security and shopping, all through simple voice commands. As these gadgets become mainstream, privacy concerns have grown. Are these devices constantly listening? If so, how can you protect your privacy?

This blog post explains how smart devices work, discusses the privacy problems and case studies surrounding them, and offers recommendations for protecting your privacy. It concludes that smart home devices are always listening, and that they use your data to process requests and improve their performance. Users will always have to give up some degree of privacy to enjoy the conveniences of smart home devices, but they can be tactful in how they use these gadgets, depending on how much exposure of their data they are willing to tolerate.

How Does a Smart Home Device Work?

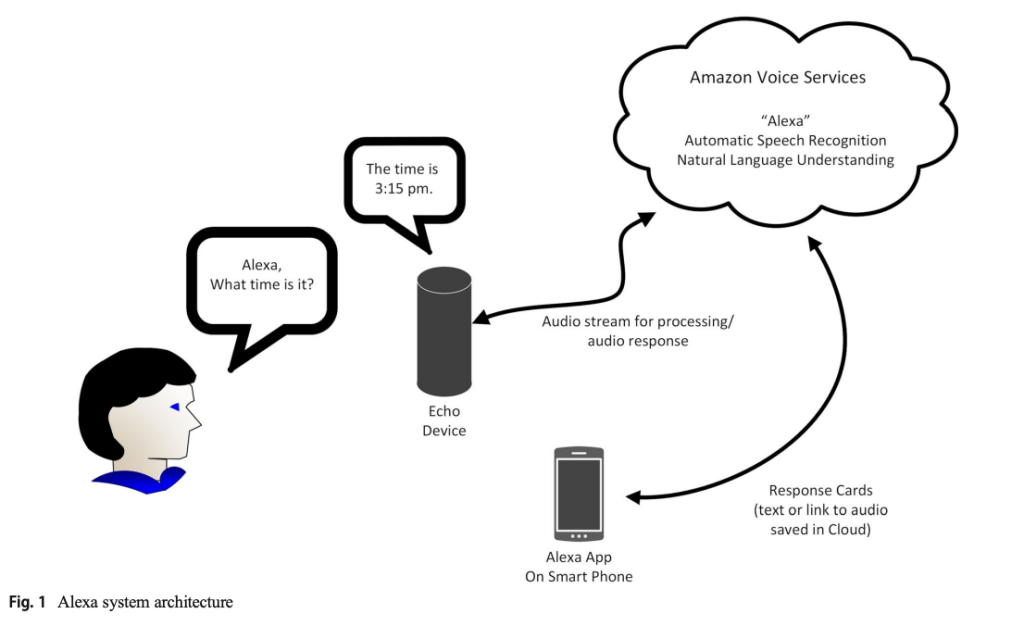

Smart devices like Amazon Alexa and Google Home rely on voice recognition technologies to function. They are designed to be ready for a voice command at all times, waiting on standby for a wake word such as “Alexa”, “Hey Google”, or “Hey Siri”. When the device recognises the wake word, it activates and starts processing the command. This involves transmitting your recorded speech to the cloud, where it is analysed further and a response is generated based on your request (Ford & Palmer, 2019).
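To make the wake-word mechanism more concrete, here is a toy Python simulation. It is a hypothetical sketch, not how Amazon, Google, or Apple actually implement their assistants: real devices run a trained acoustic model on raw audio, whereas here each “frame” is simply a word of text and detection is a plain string match. The function name `simulate_assistant`, the `WAKE_WORDS` set, and the fixed `command_length` are all assumptions made for illustration.

```python
# A toy simulation of wake-word gating. Hypothetical: real assistants run a
# trained acoustic model on raw audio, not a string match on words.
WAKE_WORDS = {"alexa"}  # assumed wake-word set for this sketch


def simulate_assistant(frames, command_length=4):
    """Return the chunks of 'audio' that would be sent to the cloud.

    frames: list of strings, each standing in for a short slice of audio.
    command_length: assumed number of frames captured after the wake word.
    """
    uploads = []
    i = 0
    while i < len(frames):
        if frames[i].lower() in WAKE_WORDS:
            # Wake word detected on the device: capture what follows as the command.
            command = frames[i + 1 : i + 1 + command_length]
            uploads.append(command)
            # Skip past the wake word and the captured command.
            i += 1 + len(command)
        else:
            # Ordinary speech: checked on the device, then discarded.
            i += 1
    return uploads


if __name__ == "__main__":
    dinner_talk = ["we", "should", "buy", "new", "shoes", "alexa", "play", "some", "jazz"]
    print(simulate_assistant(dinner_talk, command_length=3))
    # prints [['play', 'some', 'jazz']]
```

In this toy model, the dinner-table chatter before the wake word is examined on the device and then dropped, while only the words that follow it are “uploaded”. It also hints at why false triggers matter: if the detector mistakes an ordinary word for the wake word, whatever follows is sent to the cloud.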

By uploading your data to the cloud, these devices can improve their performance over time, becoming better at recognising user preferences and accents. However, this reliance on cloud storage raises concerns about how much data is collected, who has access to it, and how long it is retained. As a user, you may not fully understand how much of your personal information is shared or stored (Ford & Palmer, 2019; Molla, 2019).

The Privacy Concerns of Smart Home Devices

When you use smart home devices, you face three privacy issues: the limited ability to opt out of privacy-invading features, how your stored data is processed, and how it is used. First, opting out of these data-driven platforms is becoming increasingly difficult. As you use them for your daily needs, the devices’ algorithms consume your data, including your habits and preferences, feeding machine-learning systems that generate recommendations ranging from advertising and news to shopping choices (Marwick & Boyd, 2018). These companies have a tremendous incentive to control the information each of us sees; if they can continue to show us relevant content that we appreciate, we will stay longer and participate more (Suzor, 2019).

Secondly, the gathered data is not simply destroyed once the device has completed the task. Instead, it is frequently saved on cloud servers and analysed to improve the device’s functioning. Companies such as Amazon and Google retain access to this information and use it to train algorithms that increase device accuracy and personalisation. This means that users’ voice commands, and any surrounding conversations, may be stored for long periods whether or not users are aware of how their data is being used (Clauser, 2019; Molla, 2019).

The third privacy concern is how your stored data is used. The integration of third-party services into devices like Alexa and Google Home intensifies these problems. Many of these devices connect to a variety of third-party applications, including music streaming platforms, shopping sites, and home automation systems, which increases data sharing and storage, frequently without clear user authorisation (Lau et al., 2018; Ford & Palmer, 2019). The following case study illustrates these concerns.

Case Study: Amazon Alexa Privacy Controversy

Almost every digital platform company has faced privacy controversies. What is concerning about smart home devices is that people are less aware of their privacy-violating features than they are with smartphones. Amazon’s Alexa, one of the most popular smart home assistants, has been the subject of multiple high-profile privacy controversies. These incidents highlight the dangers of devices that are continually listening and storing data without proper protection (Lynskey, 2019).

In one well-publicised incident, an Amazon customer in Germany requested his data in August 2018 under a European Union data protection law. Amazon sent him a download link to his tracked searches on the website, along with 1,700 Alexa audio recordings of a stranger he had never heard of. The recordings gave enough information to name and locate the unlucky user and his girlfriend, and Amazon attributed the mishap to human error (Ingber, 2018; Lynskey, 2019). In another case, a couple in Oregon found in May 2018 that their Amazon Echo had sent a recording of their private conversation to one of the husband’s employees. A North Carolina man has also said that his Echo recorded a discussion and then sent it to his insurance agent (Ingber, 2018).

Further controversy surrounded the human review of voice recordings. In 2019, it was discovered that Amazon employees were listening to private Alexa recordings to improve the device’s accuracy. Although this practice was said to improve the quality of Alexa’s responses, it raised serious ethical concerns because consumers were unaware that human workers were listening to their conversations. Google Assistant has faced similar issues: in 2019, it was reported that Google contractors were analysing users’ voice recordings, sparking outrage from users concerned about the lack of transparency (Clauser, 2019).

In the same year, Bloomberg, the Guardian, Vice News, and the Belgian broadcaster VRT revealed that all five major players (Facebook, Google, Amazon, Apple, and Microsoft) used human contractors to evaluate a small fraction of voice-assistant recordings. Although the recordings are anonymised, they frequently contain enough information to identify or shame the user, especially when what the contractors overhear is personal medical information or an unintended sex tape (Lynskey, 2019). This lack of transparency and security has fuelled debate over the legal and ethical implications of such invasive features of smart home devices.

Legal and Ethical Implications

The legal and ethical consequences of these practices are crucial for protecting users’ privacy. The right to privacy should be protected as a guarantor of physical security, of freedom from discrimination based on one’s medical condition or sexual orientation, and of freedom from uninformed invasion (Flew, 2021). Yet, as these companies routinely fail to protect personal data, the distinction between choice and obligation becomes increasingly blurred. What matters most, instead, is the user’s level of privilege and their ability to withstand a data breach (Marwick & Boyd, 2018).

Privacy varies within communities as well as across large national and linguistic-cultural divides, because marginalised groups experience privacy differently from those with more privilege. Within a given society, whole classes of people who have been structurally and systematically marginalised, such as members of religious minorities, people of colour, immigrants, low-income communities, people with disabilities, youth and elders, and LGBTQ communities, have to be especially careful about their privacy (Marwick & Boyd, 2018).

In response to these growing concerns, numerous countries have passed or proposed data privacy legislation. In the European Union, the General Data Protection Regulation (GDPR) requires businesses to disclose what data they collect, why they collect it, and how long they keep it. The GDPR also grants users the right to view, amend, and erase their personal information (Goggin et al., 2017; Komaitis, 2018). By contrast, Australia’s Privacy Act establishes only general requirements for organisations when collecting, handling, storing, using, and disclosing personal information, along with certain rights for individuals to access and amend it (Goggin et al., 2017).

Despite these rules, smart device manufacturers have been criticised for failing to fully inform users about how their data is used. Even when consumers agree to data collection, many are unaware of the extent to which their information is collected and analysed. This lack of openness raises a serious ethical concern: customers may not be properly informed about the privacy risks they face when using these gadgets (Lau et al., 2018). Hence, users of smart home devices inevitably face a certain level of trade-off.

The Trade-off Between Convenience and Privacy

Privacy issues take on new significance online because of the sheer volume of information that can be shared. When you use these devices, you trade off your privacy rights against access to free online services, accepting the potential for commercial interests and government agencies to use your data without consent (Flew, 2021). Every consumer must choose between access to new technologies and the protection of their personal data.

Aside from concerns about the exploitation of personal data, several researchers consider the concept of a privacy trade-off unacceptable for a variety of reasons. Online terms of service are complex and ambiguous, and users are given an all-or-nothing choice (Suzor, 2019). This makes it extremely difficult to give either informed consent, because the terms are hard to understand and can change without notice to users, or free consent, because there is little way to use the service without agreeing to the terms of use (Flew, 2021).

Smart technologies can improve our lives in a variety of ways, but as consumers, we must weigh the hazards of data collection against the benefits of these products. Users must maintain control over their privacy settings, delete voice histories regularly, and stay informed about how companies use their data. Voice assistants also need filtering and preference controls that reduce the amount of information they require to provide services (Tabassum et al., 2019).

How to Protect Your Privacy

Given the privacy concerns associated with smart devices, it is important for consumers to take proactive steps to protect their personal information while still enjoying the benefits these devices offer. Here are a few recommendations for you as users:

- Adjust Privacy Settings: Both Amazon and Google provide privacy settings that let users manage their voice history. For example, users can choose to delete their voice recordings regularly or turn off the feature that stores voice data (Clauser, 2019; Tabassum et al., 2019).

- Use the Mute Button: Most smart speakers come with a mute button that turns off the microphone. This is a simple way to ensure that the device isn’t listening when you don’t want it to (Lau et al., 2018; Ford & Palmer, 2019).

- Delete Voice History Regularly: Amazon and Google offer tools to delete voice recordings from the cloud. Regularly deleting these recordings reduces the amount of data that is stored and reduces the risk of sensitive information being accessed (Molla, 2019; Tabassum et al., 2019).

- Consider Privacy-Focused Alternatives: If privacy is a top priority, consider alternatives. In one comparison, Microsoft and Samsung each provided a single, succinct page with all the necessary information, unlike Amazon’s messy and lengthy policy pages (Rediger, 2017).

- Educate Yourself: Understanding the privacy policies of the devices you use is crucial. Familiarise yourself with their data collection practices and review the permissions granted to third-party services. Being proactive about these details can help you make more informed decisions about your smart device usage (Lau et al., 2018).

As smart devices become more popular, the privacy issues associated with them will only increase. While these devices are convenient, they raise serious concerns about data ownership, transparency, and the possible exploitation of personal information. By understanding how these devices work and taking proactive steps to protect their privacy, users can continue to benefit from smart technologies while limiting the exposure of their personal data.

The question of whether these devices are always listening is more complicated than a simple yes or no. These devices listen in the way they need to in order to work, but what matters most is how much data they keep and use. As customers, we have an obligation to stay informed, protect our privacy, and hold businesses accountable for how they manage our data.

References

Clauser, G. (2019, August 8). Amazon’s Alexa Never Stops Listening to You. Should You Worry? The New York Times. https://www.nytimes.com/wirecutter/blog/amazons-alexa-never-stops-listening-to-you/

Flew, T. (2021). Issues of Concern. In T. Flew, Regulating platforms (pp. 72–79). Polity.

Ford, M., & Palmer, W. (2019). Alexa, are you listening to me? An analysis of Alexa voice service network traffic. Personal and Ubiquitous Computing, 23, 67–79.

Goggin, G., Vromen, A., Weatherall, K., Martin, F., Webb, A., Sunman, L., & Bailo, F. (2017). Digital Rights in Australia. Sydney: University of Sydney. https://ses.library.usyd.edu.au/handle/2123/17587

Ingber, S. (2018, December 20). Amazon Customer Receives 1,700 Audio Files Of A Stranger Who Used Alexa. NPR. https://www.npr.org/2018/12/20/678631013/amazon-customer-receives-1-700-audio-files-of-a-stranger-who-used-alexa?t=1570014709519&t=1570530199090

Komaitis, K. (2018). GDPR: Going Beyond Borders. Internet Society, 25.

Lau, J., Zimmerman, B., & Schaub, F. (2018). Alexa, are you listening? Privacy perceptions, concerns and privacy-seeking behaviors with smart speakers. Proceedings of the ACM on Human-Computer Interaction, 2(CSCW), 1–31.

Lynskey, D. (2019, October 9). ‘Alexa, are you invading my privacy?’ – the dark side of our voice assistants. The Guardian. https://www.theguardian.com/technology/2019/oct/09/alexa-are-you-invading-my-privacy-the-dark-side-of-our-voice-assistants

Marwick, A. E., & Boyd, D. (2018). Understanding Privacy at the Margins: Introduction. International Journal of Communication, 12, 1157–1165.

Molla, R. (2019, September 21). Your smart devices listening to you, explained. Vox. https://www.vox.com/recode/2019/9/20/20875755/smart-devices-listening-human-reviewers-portal-alexa-siri-assistant

Rediger, A. M. (2017). Always-Listening Technologies: Who Is Listening and What Can Be Done About It. Loy. Consumer L. Rev., 29, 229.

Suzor, N. P. (2019). Lawless: The Secret Rules That Govern Our Digital Lives. Cambridge University Press. https://doi.org/10.1017/9781108666428

Tabassum, M., Kosiński, T., Frik, A., Malkin, N., Wijesekera, P., Egelman, S., & Lipford, H. R. (2019). Investigating users’ preferences and expectations for always-listening voice assistants. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, 3(4), 1–23.
